IBM Cloud Pak for Integration V2021.2 Administration Questions and Answers

What protocol is used for secure communications between the IBM Cloud Pak for Integration module and any other capability modules installed in the cluster using the Platform Navigator?

Options:

SSL

HTTP

SSH

TLS

Answer:

D

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, secure communication between the Platform Navigator and other capability modules (such as API Connect, MQ, App Connect, and Event Streams) is essential to maintain data integrity and confidentiality.

The protocol used for secure communications between CP4I modules is Transport Layer Security (TLS).

Why Answer D (TLS) is Correct?

Encryption: TLS encrypts data during transmission, preventing unauthorized access.

Authentication: TLS lets modules verify each other's identities using certificates.

Data Integrity: TLS protects data from tampering while in transit.

Industry Standard: TLS is the modern, secure successor to SSL and is widely adopted in enterprise security.

Why TLS is Used for Secure Communications in CP4I?

By default, CP4I services use TLS 1.2 or higher, ensuring strong encryption for inter-service communication within the OpenShift cluster.

IBM Cloud Pak for Integration enforces TLS-based encryption for internal and external communications.

TLS provides a secure channel for communication between Platform Navigator and other CP4I components.

TLS is recommended over SSL because SSL 2.0 and 3.0 have known security vulnerabilities and are deprecated.
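As a client-side illustration of the "TLS 1.2 or higher" rule, the sketch below uses Python's standard ssl module to build a context that verifies certificates and refuses any protocol older than TLS 1.2. It is not how CP4I configures its own services; the CA bundle path is a hypothetical placeholder for whichever certificate authority signs your cluster's service certificates.

```python
import ssl
from typing import Optional


def make_tls_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client context that verifies peers and requires TLS 1.2+.

    ca_file is a hypothetical path to the CA that signed the cluster's
    service certificates; None falls back to the system trust store.
    """
    # create_default_context() turns on certificate and hostname verification.
    ctx = ssl.create_default_context(cafile=ca_file)
    # Refuse every protocol older than TLS 1.2 (SSL 3.0, TLS 1.0, TLS 1.1).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_tls_client_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

A socket wrapped with this context (ctx.wrap_socket(sock, server_hostname=...)) fails the handshake if the peer only offers a legacy protocol or presents an untrusted certificate.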

Explanation of Incorrect Answers:

A. SSL → Incorrect

SSL (Secure Sockets Layer) is an older protocol that has been deprecated due to security flaws.

CP4I uses TLS, which is the successor to SSL.

B. HTTP → Incorrect

HTTP is not secure for internal communication.

CP4I uses HTTPS (HTTP over TLS) for secure connections.

C. SSH → Incorrect

SSH (Secure Shell) is used for remote administration, not for service-to-service communication within CP4I.

CP4I services do not use SSH for inter-service communication.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Security Guide

Transport Layer Security (TLS) in IBM Cloud Paks

IBM Platform Navigator Overview

TLS vs SSL Security Comparison

Select all that apply

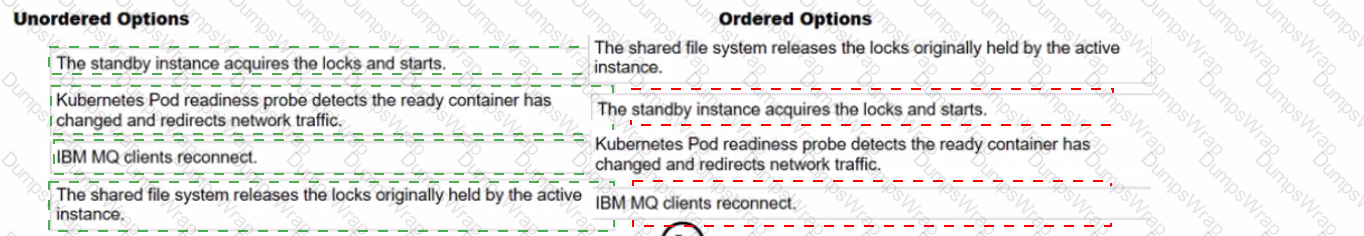

What is the correct sequence of steps of how a multi-instance queue manager failover occurs?

Options:

Answer:

Explanation:

1️⃣ The shared file system releases the locks originally held by the active instance.

When the active IBM MQ instance fails, the shared storage system releases the file locks, allowing the standby instance to take over.

2️⃣ The standby instance acquires the locks and starts.

The standby queue manager detects the failure, acquires the released locks, and promotes itself to the active instance.

3️⃣ Kubernetes Pod readiness probe detects the ready container has changed and redirects network traffic.

OpenShift/Kubernetes detects that the active instance has changed. Only the pod running the active queue manager passes its readiness probe, so the Service now routes connections to the new active queue manager.

4️⃣ IBM MQ clients reconnect.

MQ clients reconnect to the new active queue manager using automatic client reconnection, for example by setting the MQCNO_RECONNECT option in the connect options (MQCNO) or DefRecon=YES in the CHANNELS stanza of the client's mqclient.ini file.

Comprehensive Detailed Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, multi-instance queue managers use a shared file system to enable failover. The failover process follows these key steps:

Release of Locks:

The active queue manager holds a lock on shared storage.

When it fails, the lock is released, allowing the standby instance to take over.

Standby Becomes Active:

The standby queue manager acquires the released locks and promotes itself to active.

Network Traffic Redirection:

Kubernetes/OpenShift detects the pod status change using readiness probes and redirects network traffic to the new active instance.

Client Reconnection:

IBM MQ clients reconnect to the new active instance, ensuring minimal downtime.
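The ordering of the four steps can be illustrated with a toy model in which a single lock on the shared file system decides which instance is active. This is a simplified simulation, not IBM MQ or Kubernetes code, and the pod names qm-pod-0 and qm-pod-1 are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SharedFileSystem:
    """Toy stand-in for the shared storage that holds the queue manager lock."""
    lock_holder: Optional[str] = None

    def try_lock(self, instance: str) -> bool:
        """Grant the lock if it is free; report whether this instance holds it."""
        if self.lock_holder is None:
            self.lock_holder = instance
        return self.lock_holder == instance

    def release(self, instance: str) -> None:
        """Release the lock, as the file system does when its holder fails."""
        if self.lock_holder == instance:
            self.lock_holder = None


def fail_over(fs: SharedFileSystem, failed: str, standby: str) -> List[str]:
    """Walk through the four failover steps in order and log each one."""
    events: List[str] = []
    # 1. The shared file system releases the locks held by the failed instance.
    fs.release(failed)
    events.append(f"locks released for {failed}")
    # 2. The standby acquires the locks and starts as the active instance.
    if not fs.try_lock(standby):
        raise RuntimeError(f"{standby} could not acquire the lock")
    events.append(f"{standby} acquired locks and started")
    # 3. Only the active instance passes its readiness probe, so the
    #    Service now routes traffic to the standby's pod.
    events.append(f"traffic routed to {standby}")
    # 4. Clients with automatic reconnection enabled reconnect.
    events.append(f"clients reconnected to {standby}")
    return events


fs = SharedFileSystem()
fs.try_lock("qm-pod-0")             # qm-pod-0 starts as the active instance
assert not fs.try_lock("qm-pod-1")  # the standby waits while the lock is held
for event in fail_over(fs, "qm-pod-0", "qm-pod-1"):
    print(event)
```

The point of the model is the dependency chain: traffic cannot move until the standby holds the lock, and clients cannot reconnect until traffic has moved.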

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM MQ Multi-Instance Queue Manager Failover

Kubernetes Readiness and Liveness Probes

IBM MQ High Availability Using Shared Storage

What type of storage is required by the API Connect Management subsystem?

Options:

NFS

RWX block storage

RWO block storage

GlusterFS

Answer:

C

Explanation:

In IBM API Connect, which is part of IBM Cloud Pak for Integration (CP4I), the Management subsystem requires block storage with ReadWriteOnce (RWO) access mode.

Why "RWO Block Storage" is Required?

The API Connect Management subsystem handles API and product lifecycle management and stores the configuration data for provider organizations and catalogs. Analytics and runtime policy enforcement are handled by the separate Analytics and Gateway subsystems.

It requires high-performance, low-latency storage, which is best provided by block storage.

The RWO (ReadWriteOnce) access mode means that each persistent volume (PV) can be mounted read-write by only one node at a time, preventing data corruption in a clustered environment.

Common Block Storage Options for API Connect on OpenShift:

IBM Cloud Block Storage

AWS EBS (Elastic Block Store)

Azure Managed Disks

VMware vSAN
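In an operator-based install the Management subsystem's persistent volume claims are typically created for you from the storage class you specify, so you rarely write them by hand. A hypothetical claim still makes the RWO requirement concrete. The claim name, the example-block-sc storage class, and the 50Gi size below are placeholders, not API Connect defaults.

```python
from typing import Any, Dict


def make_rwo_pvc(name: str, storage_class: str, size: str) -> Dict[str, Any]:
    """Return a PersistentVolumeClaim manifest that requests RWO access."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: mountable read-write by a single node at a time.
            "accessModes": ["ReadWriteOnce"],
            # Should name a block-backed StorageClass on your cluster.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def is_rwo_only(pvc: Dict[str, Any]) -> bool:
    """True when the claim requests ReadWriteOnce and no shared access mode."""
    return pvc.get("spec", {}).get("accessModes") == ["ReadWriteOnce"]


claim = make_rwo_pvc("mgmt-data-example", "example-block-sc", "50Gi")
print(is_rwo_only(claim))
```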

Why the Other Options Are Incorrect?

| Option | Explanation | Correct? |
|---|---|---|
| A. NFS | ❌ Incorrect – Network File System (NFS) is shared file storage (RWX) and does not provide the low-latency performance needed for the Management subsystem. | ❌ |
| B. RWX block storage | ❌ Incorrect – RWX (ReadWriteMany) block storage is not supported because it allows multiple nodes to mount the volume simultaneously, leading to data inconsistency for API Connect. | ❌ |
| D. GlusterFS | ❌ Incorrect – GlusterFS is a distributed file system, which is not recommended for API Connect's stateful, performance-sensitive components. | ❌ |

Final Answer: ✅ C. RWO block storage

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM API Connect System Requirements

IBM Cloud Pak for Integration Storage Recommendations

Red Hat OpenShift Storage Documentation

How can OLM be triggered to start upgrading the IBM Cloud Pak for Integration Platform Navigator operator?

Options:

Navigate to the Installed Operators, select the Platform Navigator operator and click the Upgrade button on the Details page.

Navigate to the Installed Operators, select the Platform Navigator operator, select the operand instance, and select Upgrade from the Actions list.

Navigate to the Installed Operators, select the Platform Navigator operator and select the latest channel version on the Subscription tab.

Open the Platform Navigator web interface and select Update from the main menu.

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, the Operator Lifecycle Manager (OLM) manages operator upgrades in OpenShift. The IBM Cloud Pak Platform Navigator operator is updated through the OLM subscription mechanism, which controls how updates are applied.

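To trigger OLM to start upgrading the Platform Navigator operator, navigate to Installed Operators, select the Platform Navigator operator, and select the newer channel on the Subscription tab. Changing the channel updates the Subscription; OLM then resolves the newest operator version in that channel and either installs it automatically or waits for the install plan to be approved, depending on the Subscription's approval strategy (Automatic or Manual).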
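The console action in option C edits the Subscription's spec.channel field. A sketch of the equivalent CLI change, wrapped in Python, follows. The Subscription name, namespace, and channel are hypothetical placeholders; list the real ones with oc get subscriptions -n <namespace> first.

```python
import json
import subprocess
from typing import List


def channel_switch_command(subscription: str, namespace: str, channel: str) -> List[str]:
    """Build an `oc patch` command that points a Subscription at a new channel."""
    patch = {"spec": {"channel": channel}}
    return [
        "oc", "patch", "subscription", subscription,
        "-n", namespace,
        "--type", "merge",
        "-p", json.dumps(patch),
    ]


def switch_channel(subscription: str, namespace: str, channel: str) -> None:
    """Apply the patch; needs `oc` on PATH and a logged-in session."""
    subprocess.run(channel_switch_command(subscription, namespace, channel), check=True)


# Hypothetical names: print the command instead of running it.
print(channel_switch_command("platform-navigator-sub", "cp4i", "v5.1"))
```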

Answer:

C

What is the effect of creating a second medium size profile?

Options:

The first profile will be replaced by the second profile.

The second profile will be configured with a medium size.

The first profile will be re-configured with a medium size.

The second profile will be configured with a large size.

Answer:

B

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, profiles define the resource allocation and configuration settings for deployed services. When creating a second medium-size profile, the system will allocate the resources according to the medium-size specifications, without affecting the first profile.

Why Option B is Correct:

IBM Cloud Pak for Integration supports multiple profiles, each with its own resource allocation.

When a second medium-size profile is created, it is independently assigned the medium-size configuration without modifying the existing profiles.

This allows multiple services to run with similar resource constraints but remain separately managed.

Explanation of Incorrect Answers:

A. The first profile will be replaced by the second profile. → ❌ Incorrect

Creating a new profile does not replace an existing profile; each profile is independent.

C. The first profile will be re-configured with a medium size. → ❌ Incorrect

The first profile remains unchanged. A second profile does not modify or reconfigure an existing one.

D. The second profile will be configured with a large size. → ❌ Incorrect

The second profile will retain the specified medium size and will not be automatically upgraded to a large size.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Sizing and Profiles

Managing Profiles in IBM Cloud Pak for Integration

OpenShift Resource Allocation for CP4I

Which two OpenShift project names can be used for installing the Cloud Pak for Integration operator?

Options:

openshift-infra

openshift

default

cp4i

openshift-cp4i

Answer:

D, E

Explanation:

When installing the Cloud Pak for Integration (CP4I) operator on OpenShift, administrators must select an appropriate OpenShift project (namespace).

IBM recommends using dedicated namespaces for CP4I installation to ensure proper isolation and resource management. The two commonly used namespaces are:

cp4i → A custom namespace that administrators often create specifically for CP4I components.

openshift-cp4i → A namespace prefixed with openshift-, often used in managed environments or to align with OpenShift conventions.

Both of these namespaces are valid for CP4I installation.

Explanation of Incorrect Answers:

A. openshift-infra → ❌ Incorrect

The openshift-infra namespace is reserved for internal OpenShift infrastructure components (e.g., monitoring and networking).

It is not intended for application or operator installations.

B. openshift → ❌ Incorrect

The openshift namespace is a protected namespace used by OpenShift's core services.

Installing CP4I in this namespace can cause conflicts and is not recommended.

C. default → ❌ Incorrect

The default namespace is a generic OpenShift project that lacks the necessary role-based access control (RBAC) configurations for CP4I.

Using this namespace can lead to security and permission issues.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Installation Guide

OpenShift Namespace Best Practices

IBM Cloud Pak for Integration Operator Deployment

Which tool provides a tracing feature which allows visually following the journey of distributed transactions from the entry as an API, to the invocation of an integration flow, into an MQ queue?

Options:

Asset Manager

Integration Designer

Confluent Platform

Operations Dashboard

Answer:

D

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, the Operations Dashboard provides a tracing feature that allows users to visually track distributed transactions as they move through various integration components. This includes tracing the flow from an API entry point, through an integration flow, and into an IBM MQ queue.

Key Features of the Operations Dashboard:

Provides end-to-end tracing of transactions across multiple integration components.

Helps users visualize the journey of a request, including API calls, integration flows, and MQ interactions.

Supports troubleshooting by identifying performance bottlenecks and errors.

Displays real-time monitoring data, including latency and errors across the system.
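Distributed tracing tools generally work by propagating a shared trace ID from hop to hop, which is what lets a dashboard draw a transaction's whole journey. The toy model below illustrates that idea only; it is not the Operations Dashboard's API, and the component, operation, and queue names (such as ORDERS.IN) are hypothetical.

```python
import uuid
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Span:
    trace_id: str             # shared by every span in one end-to-end transaction
    span_id: str              # unique to this unit of work
    parent_id: Optional[str]  # span that caused this one (None for the entry point)
    component: str
    operation: str


@dataclass
class Tracer:
    spans: List[Span] = field(default_factory=list)

    def start(self, component: str, operation: str, parent: Optional[Span] = None) -> Span:
        """Record a span; child spans inherit the parent's trace ID."""
        trace_id = parent.trace_id if parent else uuid.uuid4().hex
        span = Span(
            trace_id=trace_id,
            span_id=uuid.uuid4().hex[:16],
            parent_id=parent.span_id if parent else None,
            component=component,
            operation=operation,
        )
        self.spans.append(span)
        return span

    def journey(self, trace_id: str) -> List[str]:
        """Return the recorded hops of one transaction in the order they started."""
        return [f"{s.component}: {s.operation}" for s in self.spans if s.trace_id == trace_id]


tracer = Tracer()
api = tracer.start("API Connect", "POST /orders")
flow = tracer.start("App Connect", "OrderFlow", parent=api)
put = tracer.start("IBM MQ", "put to ORDERS.IN", parent=flow)
print(tracer.journey(api.trace_id))
```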

Why Not the Other Options?

A. Asset Manager – This is used for managing, cataloging, and governing integration assets, but it does not provide tracing.

B. Integration Designer – This is used for designing integration flows but does not provide real-time tracing of transactions.

C. Confluent Platform – This is a Kafka-based event streaming platform, not a transaction tracing tool in CP4I.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Documentation – Operations Dashboard Overview

IBM Cloud Pak for Integration Monitoring and Tracing Guide

IBM MQ Tracing in Operations Dashboard

What is the purpose of the Automation Assets Deployment capability?

Options:

It is a streaming platform that enables organization and management of data from many different sources.

It is a streaming platform that enables organization and management of data but only from a single source.

It allows the user to store, manage, retrieve, and search integration assets from within the Cloud Pak.

It allows the user to only store and manage integration assets from within the Cloud Pak

Answer:

C

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, the Automation Assets Deployment capability is designed to help users efficiently manage integration assets within the Cloud Pak environment. This capability provides a centralized repository where users can store, manage, retrieve, and search for integration assets that are essential for automation and integration processes.

Option A is incorrect: The Automation Assets Deployment feature is not a streaming platform for managing data from multiple sources. Streaming platforms, such as IBM Event Streams, are used for real-time data ingestion and processing.

Option B is incorrect: Similar to Option A, this feature does not focus on data streaming or management from a single source but rather on handling integration assets.

Option C is correct: The Automation Assets Deployment capability provides a comprehensive solution for storing, managing, retrieving, and searching integration-related assets within IBM Cloud Pak for Integration. It enables organizations to reuse and efficiently deploy integration components across different services.

Option D is incorrect: While this capability allows for storing and managing assets, it also provides retrieval and search functionality, making Option C the more accurate choice.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Documentation

IBM Cloud Pak for Integration Automation Assets Overview

IBM Knowledge Center – Managing Automation Assets

What are the two custom resources provided by IBM Licensing Operator?

Options:

IBM License Collector

IBM License Service Reporter

IBM License Viewer

IBM License Service

IBM License Reporting

Answer:

A, D

Explanation:

The IBM Licensing Operator is responsible for managing and tracking IBM software license consumption in OpenShift and Kubernetes environments. It provides two key Custom Resources (CRs) to facilitate license tracking, reporting, and compliance in IBM Cloud Pak deployments:

IBM License Collector (IBMLicenseCollector)

This custom resource is responsible for collecting license usage data from IBM Cloud Pak components and aggregating the data for reporting.

It gathers information from various IBM products deployed within the cluster, ensuring that license consumption is tracked accurately.

IBM License Service (custom resource kind: IBMLicensing)

This custom resource provides real-time license tracking and metering for IBM software running in a containerized environment.

It is the core service that allows administrators to query and verify license usage.

The IBM License Service ensures compliance with IBM Cloud Pak licensing requirements and integrates with the IBM License Service Reporter for extended reporting capabilities.

Why the other options are incorrect:

B. IBM License Service Reporter – Incorrect

While IBM License Service Reporter exists as an additional reporting tool, it is not a custom resource provided directly by the IBM Licensing Operator. Instead, it is a component that enhances license reporting outside the cluster.

C. IBM License Viewer – Incorrect

No such CR exists. IBM License information can be viewed through OpenShift or CLI, but there is no "License Viewer" CR.

E. IBM License Reporting – Incorrect

While reporting is a function of IBM License Service, there is no custom resource named "IBM License Reporting."

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Licensing Service Documentation

IBM Cloud Pak Licensing Overview

OpenShift and IBM License Service Integration

After setting up OpenShift Logging, an index pattern in Kibana must be created to retrieve logs for Cloud Pak for Integration (CP4I) applications. What is the correct index for CP4I applications?

Options:

cp4i-*

applications*

torn-*

app-*

Answer:

B

Explanation:

When configuring OpenShift Logging with Kibana to retrieve logs for Cloud Pak for Integration (CP4I) applications, the correct index pattern to use is applications*.

Here’s why:

IBM Cloud Pak for Integration (CP4I) applications running on OpenShift generate logs that are stored in the Elasticsearch logging stack.

The standard OpenShift logging format organizes logs into different indices based on their source type.

The applications* index pattern is used to capture logs for applications deployed on OpenShift, including CP4I components.

Analysis of the options:

Option A (Incorrect – cp4i-*): There is no specific index pattern named cp4i-* for retrieving CP4I logs in OpenShift Logging.

Option B (Correct – applications*): This is the correct index pattern used in Kibana to retrieve logs from OpenShift applications, including CP4I components.

Option C (Incorrect – torn-*): This is not a valid OpenShift logging index pattern.

Option D (Incorrect – app-*): This index does not exist in OpenShift logging by default.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Logging Guide

OpenShift Logging Documentation

Kibana and Elasticsearch Index Patterns in OpenShift

What is the minimum number of Elasticsearch nodes required for a highly-available logging solution?

Options:

1

2

3

7

Answer:

C

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, which runs on Red Hat OpenShift, logging is handled using the OpenShift Logging Operator, which often utilizes Elasticsearch as the log storage backend.

For a highly available (HA) Elasticsearch cluster, the minimum number of nodes required is 3.

Why Are 3 Elasticsearch Nodes Required for High Availability?

Elasticsearch uses a quorum-based system for cluster state management.

A minimum of three nodes ensures that the cluster can maintain a quorum in case one node fails.

Electing a master requires a majority (quorum) of the master-eligible nodes. With three master-eligible nodes the quorum is two, so if the active master fails, the two remaining nodes can still elect a new one.

Replicating shards across nodes reduces the risk of data loss and improves fault tolerance.

Example Elasticsearch Deployment for HA:

A standard HA Elasticsearch setup consists of:

3 master-eligible nodes (manage cluster state).

At least 2 data nodes (store logs and allow redundancy).

Optional client nodes (handle queries to offload work from data nodes).

Why Answer C (3) is Correct?

Ensures HA by allowing Elasticsearch to withstand a node failure without losing cluster control.

Avoids the two-node problem: with two master-eligible nodes the quorum is also two, so losing either node leaves the cluster unable to elect a master; without a quorum rule, the two nodes could instead each elect themselves (split-brain).

Recommended by IBM and Red Hat for OpenShift logging solutions.
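The majority arithmetic behind the 3-node minimum can be checked with a few lines of Python. This is a simplified model of master election (Elasticsearch 7 and later also manage voting configurations automatically), not Elasticsearch code.

```python
def quorum(master_eligible: int) -> int:
    """Smallest strict majority of the master-eligible nodes."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


def tolerated_failures(master_eligible: int) -> int:
    """Nodes that can fail while a majority can still elect a master."""
    return master_eligible - quorum(master_eligible)


def minimum_nodes_for_ha(failures: int = 1) -> int:
    """Smallest cluster that can survive `failures` node losses."""
    n = 1
    while tolerated_failures(n) < failures:
        n += 1
    return n


for n in (1, 2, 3, 7):
    print(f"{n} nodes: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
print("minimum for HA:", minimum_nodes_for_ha())
```

Running it shows that 1 and 2 nodes tolerate no failures, 3 nodes tolerate one, and 7 tolerate three, so 3 is the smallest count that survives a single node loss.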

Explanation of Incorrect Answers:

A. 1 → Incorrect

A single-node Elasticsearch deployment is not HA: if the node fails, the log store becomes unavailable, and because no replica exists on another node, its data can be lost.

B. 2 → Incorrect

Two nodes cannot tolerate a failure: the quorum of two nodes is two, so when one node goes down the remaining node cannot elect a master on its own.

Without a quorum requirement this could lead to split-brain; with one, the cluster stops working when either node goes down.

D. 7 → Incorrect

While a larger cluster (e.g., 7 nodes) improves scalability and performance, it is not the minimum requirement for HA.

Three nodes are sufficient for high availability.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Logging and Monitoring

OpenShift Logging Operator - Elasticsearch Deployment

Elasticsearch High Availability Best Practices

IBM OpenShift Logging Solution Architecture

How many Cloud Pak for Integration licenses will the non-production environment cost as compared to the production environment when deploying API Connect, App Connect Enterprise, and MQ?

Options:

The same amount

Half as many.

More than half as many.

More information is needed to determine the cost.

Answer:

B

Explanation:

IBM Cloud Pak for Integration (CP4I) licensing follows Virtual Processor Core (VPC)-based pricing, where licensing requirements differ between production and non-production environments.

For non-production environments, IBM typically requires half the number of VPC licenses compared to production environments when deploying components like API Connect, App Connect Enterprise, and IBM MQ.

This 50% reduction applies because IBM offers a non-production environment discount, which allows enterprises to use fewer VPCs for testing, development, and staging while still maintaining functionality.
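A small worked example of the 1:2 ratio, under the explanation's assumption that non-production needs half the licenses of the equivalent production deployment. The per-component counts are hypothetical; real entitlements depend on your contract and on each component's conversion ratio.

```python
from fractions import Fraction
from typing import Dict

# Ratio taken from the explanation (non-production : production = 1 : 2).
NON_PRODUCTION_RATIO = Fraction(1, 2)


def non_production_licenses(production: Dict[str, int]) -> Dict[str, Fraction]:
    """Scale each component's production license count by the non-production ratio."""
    return {name: count * NON_PRODUCTION_RATIO for name, count in production.items()}


# Hypothetical production license counts per component.
production = {"API Connect": 8, "App Connect Enterprise": 12, "MQ": 4}
non_production = non_production_licenses(production)
print(sum(production.values()), "->", sum(non_production.values()))
```

Fraction keeps odd counts exact (3 production licenses become 3/2) instead of silently rounding; how fractional entitlements are rounded is a contract question, not something the sketch decides.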

Why Answer B is Correct?

IBM provides reduced VPC license requirements for non-production environments to lower costs.

The licensing ratio is generally 1:2 (Non-Production:Production), meaning the non-production environment will require half the licenses compared to production.

This policy is commonly applied to major CP4I components, including:

IBM API Connect

IBM App Connect Enterprise

IBM MQ

Explanation of Incorrect Answers:

A. The same amount → Incorrect

Non-production environments typically require half the VPC licenses, not the same amount.

C. More than half as many → Incorrect

IBM's standard licensing policy offers at least a 50% reduction, so this is not correct.

D. More information is needed to determine the cost. → Incorrect

While pricing details depend on contract terms, IBM has a standard non-production licensing policy, making it predictable.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Licensing Guide

IBM Cloud Pak VPC Licensing

IBM MQ Licensing Details

IBM API Connect Licensing

IBM App Connect Enterprise Licensing

Which statement is true about the Confluent Platform capability for the IBM Cloud Pak for Integration?

Options:

It provides the ability to trace transactions through IBM Cloud Pak for Integration.

It provides a capability that allows user to store, manage, and retrieve integration assets in IBM Cloud Pak for Integration.

It provides APIs to discover applications, platforms, and infrastructure in the environment.

It provides an event-streaming platform to organize and manage data from many different sources with one reliable, high performance system.

Answer:

D

Explanation:

IBM Cloud Pak for Integration (CP4I) includes Confluent Platform as a key capability to support event-driven architecture and real-time data streaming. The Confluent Platform is built on Apache Kafka, providing robust event-streaming capabilities that allow organizations to collect, process, store, and manage data from multiple sources in a highly scalable and reliable manner.

This capability is essential for real-time analytics, event-driven microservices, and data integration between various applications and services. With its high-performance messaging backbone, it ensures low-latency event processing while maintaining fault tolerance and durability.
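To make "organize and manage data from many sources" concrete, here is a toy, in-memory model of the partitioned, append-only log that Kafka-based platforms such as Confluent Platform are built on. It is not the Kafka or Confluent client API: crc32 is a deterministic stand-in for Kafka's default partitioner (which uses murmur2), and the topic, key, and event names are hypothetical.

```python
import zlib
from typing import List, Tuple

Record = Tuple[str, str]  # (key, value)


class TopicLog:
    """Toy in-memory topic: a fixed set of append-only partitions."""

    def __init__(self, partitions: int) -> None:
        if partitions < 1:
            raise ValueError("a topic needs at least one partition")
        self.partitions: List[List[Record]] = [[] for _ in range(partitions)]

    def partition_for(self, key: str) -> int:
        # Same key -> same partition, which preserves per-key ordering.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key: str, value: str) -> Tuple[int, int]:
        """Append a record and return its (partition, offset)."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1

    def consume(self, partition: int, from_offset: int = 0) -> List[Record]:
        # Records are retained after reading, so consumers can replay from any offset.
        return self.partitions[partition][from_offset:]


# Events from different (hypothetical) sources land in one topic.
orders = TopicLog(partitions=3)
for source, event in [("web", "order-1 placed"), ("pos", "order-2 placed"), ("web", "order-1 paid")]:
    print(source, orders.produce(source, event))
```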

Explanation of Other Options:

A. It provides the ability to trace transactions through IBM Cloud Pak for Integration.

Incorrect. Transaction tracing in IBM Cloud Pak for Integration is provided by the Operations Dashboard, not by Confluent Platform.

B. It provides a capability that allows users to store, manage, and retrieve integration assets in IBM Cloud Pak for Integration.

Incorrect. Storing, managing, and retrieving integration assets is the role of the Automation Assets capability, not Confluent Platform.

C. It provides APIs to discover applications, platforms, and infrastructure in the environment.

Incorrect. This describes discovery and observability tooling such as IBM Instana or IBM Cloud Pak for Multicloud Management, rather than the event-streaming capabilities of Confluent Platform.

References:

IBM Cloud Pak for Integration Documentation – Event Streams (Confluent Platform Integration) (IBM Cloud Docs)

Confluent Platform Overview (Confluent Documentation)

IBM Event Streams for IBM Cloud Pak for Integration (IBM Event Streams)

Which command shows the current cluster version and available updates?

Options:

update

adm upgrade

adm update

upgrade

Answer:

B

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, which runs on OpenShift, administrators often need to check the current cluster version and available updates before performing an upgrade.

The correct command to display the current OpenShift cluster version and check for available updates is:

oc adm upgrade

This command provides information about:

The current OpenShift cluster version.

Whether a newer version is available for upgrade.

The channel and upgrade path.

Why the other options are incorrect:

A. update – Incorrect

There is no oc update or update command in OpenShift CLI for checking cluster versions.

C. adm update – Incorrect

oc adm update is not a valid command in OpenShift. The correct subcommand is adm upgrade.

D. upgrade – Incorrect

oc upgrade is not a valid OpenShift CLI command. The correct syntax requires adm upgrade.

Example output of oc adm upgrade:

```
$ oc adm upgrade
Cluster version is 4.10.16

Updates available:
Version 4.11.0
Version 4.11.1
```
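For scripted checks, the command's output can be parsed as sketched below. Parsing human-readable CLI output is brittle because its layout differs between oc releases, so the regular expressions are assumptions fitted to the example above and to the common tabular listing in which each update line starts with a version number.

```python
import re
import subprocess
from typing import List, Optional, Tuple

CURRENT_RE = re.compile(r"^Cluster version is (\S+)", re.MULTILINE)
# Lines that start with a version number, optionally prefixed by "Version".
UPDATE_RE = re.compile(r"^[ \t]*(?:Version[ \t]+)?(\d+\.\d+\.\d+)\b", re.MULTILINE)


def parse_adm_upgrade(output: str) -> Tuple[Optional[str], List[str]]:
    """Extract the current version and any listed update versions."""
    current = CURRENT_RE.search(output)
    updates = [m.group(1) for m in UPDATE_RE.finditer(output)]
    return (current.group(1) if current else None), updates


def check_cluster_updates() -> Tuple[Optional[str], List[str]]:
    """Run `oc adm upgrade` (needs `oc` on PATH and a logged-in session)."""
    result = subprocess.run(["oc", "adm", "upgrade"], capture_output=True, text=True, check=True)
    return parse_adm_upgrade(result.stdout)


sample = """Cluster version is 4.10.16

Updates available:
Version 4.11.0
Version 4.11.1
"""
print(parse_adm_upgrade(sample))
```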

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

OpenShift Cluster Upgrade Documentation

IBM Cloud Pak for Integration OpenShift Upgrade Guide

Red Hat OpenShift CLI Reference

When instantiating a new capability through the Platform Navigator, what must be done to see distributed tracing data?

Options:

Press the 'enable' button in the Operations Dashboard.

Add 'operationsDashboard: true' to the deployment YAML.

Run the oc register command against the capability.

Register the capability with the Operations Dashboard

Answer:

DExplanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, when instantiating a new capability via the Platform Navigator, distributed tracing data is not automatically available. To enable tracing and observability for a capability, it must be registered with the Operations Dashboard.

Why "Register the capability with the Operations Dashboard" is the correct answer?

The Operations Dashboard in CP4I provides centralized observability, logging, and distributed tracing across integration components.

Capabilities such as IBM API Connect, App Connect, IBM MQ, and Event Streams need to be explicitly registered with the Operations Dashboard to collect and display tracing data.

Registration links the capability with the distributed tracing service, allowing telemetry data to be captured.

Why the Other Options Are Incorrect?

| Option | Explanation | Correct? |
|---|---|---|
| A. Press the 'enable' button in the Operations Dashboard. | ❌ Incorrect – There is no single 'enable' button that automatically registers capabilities. Manual registration is required. | ❌ |
| B. Add 'operationsDashboard: true' to the deployment YAML. | ❌ Incorrect – This setting alone does not enable distributed tracing. The capability still needs to be registered with the Operations Dashboard. | ❌ |
| C. Run the oc register command against the capability. | ❌ Incorrect – There is no oc register command in OpenShift or CP4I for registering capabilities with the Operations Dashboard. | ❌ |

Final Answer: ✅ D. Register the capability with the Operations Dashboard

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration - Operations Dashboard

Enabling Distributed Tracing in IBM CP4I

IBM CP4I - Observability and Monitoring

An administrator is installing Cloud Pak for Integration onto an OpenShift cluster that does not have access to the internet.

How do they provide their ibm-entitlement-key when mirroring images to a portable registry?

Options:

The administrator uses a cloudctl case command to configure credentials for registries which require authentication before mirroring the images.

The administrator sets the key with "export ENTITLEMENTKEY" and then uses the "cloudPakOfflineInstaller -mirror-images" script to mirror the images.

The administrator adds the entitlement-key to the properties file $HOME/.airgap/registries on the Bastion Host.

The ibm-entitlement-key is added as a docker-registry secret onto the OpenShift cluster.

Answer:

A

Explanation:

When installing IBM Cloud Pak for Integration (CP4I) on an OpenShift cluster that lacks internet access, an air-gapped installation is required. This process involves mirroring container images from an IBM container registry to a portable registry that can be accessed by the disconnected OpenShift cluster.

To authenticate and mirror images, the administrator must:

Use the cloudctl case command to configure credentials, including the IBM entitlement key, before initiating the mirroring process.

Authenticate with the IBM Container Registry using the entitlement key.

Mirror the required images from IBM’s registry to a local registry that the disconnected OpenShift cluster can access.
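The credential step can be sketched in Python as an argument list for cloudctl case launch with the configure-creds-airgap action, which is how IBM's air-gap instructions for CASE-based installs typically pass the entitlement key (registry cp.icr.io, user name cp). Treat the action and argument strings as assumptions to verify against the documentation for your CASE version; the CASE archive path, inventory name, and ENTITLEMENT_KEY environment variable are hypothetical placeholders.

```python
import os
import subprocess
from typing import List


def configure_creds_command(case_archive: str, inventory: str, entitlement_key: str) -> List[str]:
    """Build the cloudctl call that stores entitled-registry credentials for mirroring."""
    return [
        "cloudctl", "case", "launch",
        "--case", case_archive,
        "--inventory", inventory,
        "--action", "configure-creds-airgap",
        # The entitled registry uses the literal user name "cp" with the key as password.
        "--args", f"--registry cp.icr.io --user cp --pass {entitlement_key}",
    ]


def configure_creds(case_archive: str, inventory: str) -> None:
    # Read the key from the environment so it is not hard-coded, and never log
    # the command itself, because it contains the key.
    key = os.environ["ENTITLEMENT_KEY"]
    subprocess.run(configure_creds_command(case_archive, inventory, key), check=True)
```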

Why Other Options Are Incorrect:

B. export ENTITLEMENTKEY and cloudPakOfflineInstaller -mirror-images

The command cloudPakOfflineInstaller -mirror-images is not a valid IBM Cloud Pak installation step.

IBM requires the use of cloudctl case commands for air-gapped installations.

C. Adding the entitlement key to .airgap/registries

There is no documented requirement to store the entitlement key in $HOME/.airgap/registries.

IBM Cloud Pak for Integration does not use this file for authentication.

D. Adding the ibm-entitlement-key as a Docker secret

While secrets are used in OpenShift for image pulling, they are not directly involved in mirroring images for air-gapped installations.

The entitlement key is required at the mirroring step, not when deploying the images.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Documentation: Installing Cloud Pak for Integration in an Air-Gapped Environment

IBM Cloud Pak Entitlement Key and Image Mirroring

OpenShift Air-Gapped Installation Guide

Which OpenShift component is responsible for checking the OpenShift Update Service for valid updates?

Options:

Cluster Update Operator

Cluster Update Manager

Cluster Version Updater

Cluster Version Operator

Answer:

D

Explanation:

The Cluster Version Operator (CVO) is responsible for checking the OpenShift Update Service (OSUS) for valid updates in an OpenShift cluster. It continuously monitors for available updates and ensures that the cluster components are updated according to the specified update policy.

Key Functions of the Cluster Version Operator (CVO):

Periodically checks the OpenShift Update Service (OSUS) for available updates.

Manages the ClusterVersion resource, which defines the current version and available updates.

Ensures that cluster operators are applied in the correct order.

Handles update rollouts and recovery in case of failures.
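The CVO publishes what it learns from the update service on the cluster-scoped ClusterVersion object named version: the channel in spec.channel, the version the cluster is running or updating to in status.desired.version, and the recommended targets in status.availableUpdates. The sketch below reads those fields from the JSON returned by oc get clusterversion version -o json; the helper function names are illustrative.

```python
import json
import subprocess
from typing import Any, Dict, List, Optional


def load_cluster_version() -> Dict[str, Any]:
    """Fetch the ClusterVersion object the CVO maintains (requires `oc` and a login)."""
    out = subprocess.run(
        ["oc", "get", "clusterversion", "version", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)


def channel(cv: Dict[str, Any]) -> Optional[str]:
    return cv.get("spec", {}).get("channel")


def desired_version(cv: Dict[str, Any]) -> Optional[str]:
    return (cv.get("status", {}).get("desired") or {}).get("version")


def available_updates(cv: Dict[str, Any]) -> List[str]:
    # The CVO fills status.availableUpdates from the update service; it is null when empty.
    return [u["version"] for u in cv.get("status", {}).get("availableUpdates") or []]


# Usage against a live cluster:
#   cv = load_cluster_version()
#   print(channel(cv), desired_version(cv), available_updates(cv))
```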

Why Not the Other Options?

A. Cluster Update Operator – No such component exists in OpenShift.

B. Cluster Update Manager – This is not an OpenShift component. The update process is managed by CVO.

C. Cluster Version Updater – Incorrect term; the correct term is Cluster Version Operator (CVO).

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Documentation – OpenShift Cluster Version Operator

IBM Cloud Pak for Integration (CP4I) v2021.2 Knowledge Center

Red Hat OpenShift Documentation on Cluster Updates

Which diagnostic information must be gathered and provided to IBM Support for troubleshooting the Cloud Pak for Integration instance?

Options:

Standard OpenShift Container Platform logs.

Platform Navigator event logs.

Cloud Pak For Integration activity logs.

Integration tracing activity reports.

Answer:

A

Explanation:

When troubleshooting an IBM Cloud Pak for Integration (CP4I) v2021.2 instance, IBM Support requires diagnostic data that provides insights into the system’s performance, errors, and failures. The most critical diagnostic information comes from the Standard OpenShift Container Platform logs because:

CP4I runs on OpenShift, and its components are deployed as Kubernetes pods, meaning logs from OpenShift provide essential insights into infrastructure-level and application-level issues.

The OpenShift logs include:

Pod logs (oc logs …).

Event logs (oc get events), which provide details about errors, scheduling issues, or failed deployments.

Node and system logs, which help diagnose resource exhaustion, networking issues, or storage failures.
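The log sources above can be gathered in a repeatable way. The Python sketch below only builds the oc command lines and does not run them. The namespace, pod names, and output directory are hypothetical placeholders, and the selection of commands is an assumption about a reasonable starting bundle, not an IBM Support checklist.

```python
import shlex


def diagnostic_commands(namespace: str, pods: list, dest_dir: str = "./cp4i-diagnostics") -> list:
    """Build oc command lines for collecting OpenShift-level diagnostics.

    namespace, pods and dest_dir are placeholders to adapt to the installation.
    """
    commands = [
        # Cluster-wide diagnostic bundle collected by OpenShift's must-gather tooling.
        ["oc", "adm", "must-gather", f"--dest-dir={dest_dir}/must-gather"],
        # Resource definitions and pod logs for everything in the CP4I namespace.
        ["oc", "adm", "inspect", f"ns/{namespace}", f"--dest-dir={dest_dir}/inspect"],
        # Events (scheduling problems, failed mounts, image pull errors), oldest first.
        ["oc", "get", "events", "-n", namespace, "--sort-by=.metadata.creationTimestamp"],
    ]
    for pod in pods:
        # Logs from every container in the pod, not only the first one.
        commands.append(["oc", "logs", pod, "-n", namespace, "--all-containers"])
    return commands


for cmd in diagnostic_commands("cp4i", ["example-pod-0"]):
    print(shlex.join(cmd))
```

Each list can be passed unchanged to subprocess.run on a workstation where oc is logged in to the cluster.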

Explanation of Incorrect Answers:

B. Platform Navigator event logs → Incorrect

While Platform Navigator manages CP4I services, its event logs focus mainly on UI-related issues and do not provide deep troubleshooting data needed for IBM Support.

C. Cloud Pak For Integration activity logs → Incorrect

CP4I activity logs include component-specific logs but do not cover the underlying OpenShift platform or container-level issues, which are crucial for troubleshooting.

D. Integration tracing activity reports → Incorrect

Integration tracing focuses on tracking API and message flows but is not sufficient for diagnosing broader CP4I system failures or deployment issues.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Troubleshooting Guide

OpenShift Log Collection for Support

IBM MustGather for Cloud Pak for Integration

Red Hat OpenShift Logging and Monitoring

What needs to be created to allow integration flows in App Connect Designer or App Connect Dashboard to invoke callable flows across a hybrid environment?

Options:

Switch server

Mapping assist

Integration agent

Kafka sync

Answer:

C

Explanation:

In IBM App Connect, when integrating flows across a hybrid environment (a combination of cloud and on-premises systems), an Integration Agent is required to enable callable flows.

Why is the Integration Agent needed?

Callable flows allow one integration flow to invoke another flow that may be running in a different environment (on-premises or cloud).

The Integration Agent acts as a bridge between IBM App Connect Designer (cloud-based) or App Connect Dashboard and the on-premises resources.

It ensures secure and reliable communication between different environments.

Analysis of the Options:

Option A (Incorrect – Switch server): No such component is needed in App Connect for hybrid integrations.

Option B (Incorrect – Mapping assist): This is used for transformation support but does not enable cross-environment callable flows.

Option C (Correct – Integration agent): The Integration Agent is specifically designed to support callable flows across hybrid environments.

Option D (Incorrect – Kafka sync): While Kafka is useful for event-driven architectures, it is not required for invoking callable flows between App Connect instances.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM App Connect Hybrid Integration Guide

Using Integration Agents for Callable Flows

IBM Cloud Pak for Integration Documentation

The OpenShift Logging Elasticsearch instance is optimized and tested for short term storage. Approximately how long will it store data for?

Options:

1 day

30 days

7 days

6 months

Answer:

C

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, OpenShift Logging utilizes Elasticsearch as its log storage backend. The default configuration of the OpenShift Logging stack is optimized for short-term storage and is designed to retain logs for approximately 7 days before they are automatically purged.

Why is the retention period 7 days?

Performance Optimization: The OpenShift Logging Elasticsearch instance is designed for short-term log retention to balance storage efficiency and performance.

Default Retention Configuration: By default, OpenShift Logging deletes log indices older than approximately 7 days. Earlier releases enforced this with Elasticsearch Curator; later OpenShift 4.x releases replaced Curator with index management configured through the retentionPolicy (maxAge) settings of the ClusterLogging custom resource.

Designed for Operational Logs: The default OpenShift Logging stack is intended for short-term troubleshooting and monitoring, not long-term log archival.

If longer retention is required, organizations can:

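Configure a different retention period by modifying the retention settings (Elasticsearch Curator in older releases, or the retentionPolicy maxAge values of the ClusterLogging custom resource in later releases).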

Forward logs to an external log storage system like Splunk, IBM Cloud Object Storage, or another long-term logging solution.
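Whichever mechanism enforces it, the retention rule amounts to deleting indices whose age exceeds a configured maximum. The Python sketch below illustrates only that rule. It assumes a maximum age written as a number followed by h, d, or w (for example 7d); it is not the OpenShift Logging implementation, and the timestamps are illustrative.

```python
import re
from datetime import datetime, timedelta

# Suffixes accepted by this sketch (an assumption, not the full OpenShift syntax).
_UNITS = {"h": "hours", "d": "days", "w": "weeks"}


def parse_max_age(text: str) -> timedelta:
    """Convert a value such as "7d" or "12h" into a timedelta."""
    match = re.fullmatch(r"(\d+)([hdw])", text)
    if match is None:
        raise ValueError(f"unsupported max age: {text!r}")
    amount, unit = int(match.group(1)), match.group(2)
    return timedelta(**{_UNITS[unit]: amount})


def expired_indices(index_times: list, now: datetime, max_age: str = "7d") -> list:
    """Return the index creation times that fall outside the retention window."""
    cutoff = now - parse_max_age(max_age)
    return [created for created in index_times if created < cutoff]


now = datetime(2021, 7, 10)
daily_indices = [datetime(2021, 7, day) for day in range(1, 11)]
print(expired_indices(daily_indices, now))         # default 7-day window
print(expired_indices(daily_indices, now, "30d"))  # a longer window keeps all ten
```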

Why Other Options Are Incorrect:

A. 1 day – Too short; OpenShift Logging does not delete logs on a daily basis by default.

B. 30 days – The default retention period is 7 days, not 30. A 30-day retention period would require manual configuration changes.

D. 6 months – OpenShift Logging is not optimized for such long-term storage. Long-term log retention should be managed using external storage solutions.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Logging and Monitoring

Red Hat OpenShift Logging Documentation

Configuring OpenShift Logging Retention Policy

Which OpenShift component controls the placement of workloads on nodes for Cloud Pak for Integration deployments?

Options:

API Server

Controller Manager

Etcd

Scheduler

Answer:

D

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, which runs on Red Hat OpenShift, the component responsible for determining the placement of workloads (pods) on worker nodes is the Scheduler.
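The Scheduler's decision can be pictured as two phases: filter out nodes that cannot run the pod, then score the remaining nodes and pick the best one. The Python sketch below is a heavily simplified model under stated assumptions: it considers only CPU and memory requests plus a node selector, and its scoring loosely resembles a "least allocated" strategy. The real kube-scheduler uses many more plugins, and the node names and numbers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int    # allocatable CPU in millicores
    cpu_used_m: int
    mem_capacity_mi: int   # allocatable memory in MiB
    mem_used_mi: int
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    cpu_request_m: int
    mem_request_mi: int
    node_selector: Dict[str, str] = field(default_factory=dict)


def feasible(node: Node, pod: Pod) -> bool:
    """Filter phase: enough free resources and all selector labels match."""
    fits = (node.cpu_capacity_m - node.cpu_used_m >= pod.cpu_request_m
            and node.mem_capacity_mi - node.mem_used_mi >= pod.mem_request_mi)
    selected = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return fits and selected


def score(node: Node, pod: Pod) -> float:
    """Score phase: average fraction of CPU and memory left free after placement."""
    cpu_free = (node.cpu_capacity_m - node.cpu_used_m - pod.cpu_request_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_used_mi - pod.mem_request_mi) / node.mem_capacity_mi
    return (cpu_free + mem_free) / 2


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node name, or None if the pod would stay Pending."""
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(n, pod)).name


nodes = [
    Node("worker-1", 4000, 3500, 16384, 8000, {"zone": "a"}),
    Node("worker-2", 4000, 1000, 16384, 4096, {"zone": "a"}),
    Node("worker-3", 8000, 1000, 16384, 4096, {"zone": "b"}),
]
print(schedule(Pod("mq-0", 1000, 2048), nodes))
print(schedule(Pod("ace-0", 1000, 2048, {"zone": "a"}), nodes))
print(schedule(Pod("big-0", 9000, 2048), nodes))
```

In this model, worker-1 is filtered out for lack of CPU, the selector restricts ace-0 to zone a, and a pod that fits nowhere gets no node, which corresponds to a pod remaining in the Pending state.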

Explanation of OpenShift Components:

API Server (Option A): The API Server is the front-end of the OpenShift and Kubernetes control plane, handling REST API requests, authentication, and cluster state updates. However, it does not decide where workloads should be placed.

Controller Manager (Option B): The Controller Manager ensures the desired state of the system by managing controllers (e.g., ReplicationController, NodeController). It does not handle pod placement.

Etcd (Option C): Etcd is the distributed key-value store used by OpenShift and Kubernetes to store cluster state data. It plays no role in scheduling workloads.

Scheduler (Option D - Correct Answer): The Scheduler is responsible for selecting the most suitable node to run a newly created pod based on resource availability, affinity/anti-affinity rules, and other constraints.

Why the Scheduler is Correct?

When a new pod is created, it initially has no assigned node.

The Scheduler filters out nodes that cannot run the pod (for example, because of insufficient resources, taints, or node selectors), scores the remaining nodes, and binds the pod to the highest-scoring node, balancing resource utilization while honoring placement policies.

In CP4I, efficient workload placement is crucial for maintaining performance and resilience, and the Scheduler ensures that workloads are optimally distributed across the cluster.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM CP4I Documentation – Deploying on OpenShift

Red Hat OpenShift Documentation – Understanding the Scheduler

Kubernetes Documentation – Scheduler

What team is created as part of the initial installation of Cloud Pak for Integration?

Options:

zen followed by a timestamp.

zen followed by a GUID.

zenteam followed by a timestamp.

zenteam followed by a GUID.

Answer:

D

Explanation:

During the initial installation of IBM Cloud Pak for Integration (CP4I) v2021.2, a default team is automatically created to manage access control and user roles within the system. This team is named "zenteam", followed by a Globally Unique Identifier (GUID).

Key Points:

"zenteam" is the default team created as part of CP4I’s initial installation.

A GUID (Globally Unique Identifier) is appended to "zenteam" to ensure uniqueness across different installations.

This team is crucial for user and role management, as it provides access to various components of CP4I such as API management, messaging, and event streams.

The GUID ensures that multiple deployments within the same cluster do not conflict in terms of team naming.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Documentation

IBM Knowledge Center - User and Access Management

IBM CP4I Installation Guide

What is the minimum Red Hat OpenShift version for Cloud Pak for Integration V2021.2?

Options:

4.7.4

4.6.8

4.7.4

4.6.2

Answer:

A

Explanation:

IBM Cloud Pak for Integration (CP4I) v2021.2 is designed to run on Red Hat OpenShift Container Platform (OCP). Each version of CP4I has a minimum required OpenShift version to ensure compatibility, performance, and security.

For Cloud Pak for Integration v2021.2, the minimum required OpenShift version is 4.7.4.
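A minimum-version check must compare versions numerically rather than as strings, because "4.7.10" sorts before "4.7.4" in plain string order. The Python sketch below takes 4.7.4 as the minimum stated above and, as a simplifying assumption, ignores any suffix after a hyphen or plus sign (such as a release-candidate tag).

```python
def parse_version(version: str) -> tuple:
    """Turn "4.7.10" into (4, 7, 10); anything after '-' or '+' is dropped."""
    core = version.split("-", 1)[0].split("+", 1)[0]
    return tuple(int(part) for part in core.split("."))


def meets_minimum(cluster_version: str, minimum: str = "4.7.4") -> bool:
    """True when the cluster version is at least the minimum, compared numerically."""
    return parse_version(cluster_version) >= parse_version(minimum)


for candidate in ["4.6.8", "4.6.2", "4.7.4", "4.7.10", "4.8.2"]:
    print(candidate, meets_minimum(candidate))

# Plain string comparison gives the wrong answer for two-digit patch levels:
print("4.7.10" >= "4.7.4")  # False, although 4.7.10 is newer
```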

Key Considerations for OpenShift Version Requirements:

Compatibility: CP4I components, including IBM MQ, API Connect, App Connect, and Event Streams, require specific OpenShift versions to function properly.

Security & Stability: Newer OpenShift versions include critical security updates and performance improvements essential for enterprise deployments.

Operator Lifecycle Manager (OLM): CP4I uses OpenShift Operators, and the correct OpenShift version ensures proper installation and lifecycle management.

IBM’s Official Minimum OpenShift Version Requirements for CP4I v2021.2:

Minimum required OpenShift version: 4.7.4

Recommended OpenShift version: 4.8 or later

Why Answer A (4.7.4) is Correct?

IBM officially requires at least OpenShift 4.7.4 for deploying CP4I v2021.2.

OpenShift 4.6.x versions are not supported for CP4I v2021.2.

OpenShift 4.7.4 is the first fully supported version that meets IBM's compatibility requirements.

Explanation of Incorrect Answers:

B. 4.6.8 → Incorrect

OpenShift 4.6.x is not supported for CP4I v2021.2.

IBM Cloud Pak for Integration v2021.1 supported OpenShift 4.6, but v2021.2 requires 4.7.4 or later.

C. 4.7.4 → Duplicate of option A

This option repeats the value in option A; 4.7.4 is the minimum required OpenShift version for CP4I v2021.2.

D. 4.6.2 → Incorrect

OpenShift 4.6.2 is outdated and does not meet the minimum version requirement for CP4I v2021.2.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration v2021.2 System Requirements

Red Hat OpenShift Version Support Matrix

IBM Cloud Pak for Integration OpenShift Deployment Guide

Which two authentication types are supported for single sign-on in Foundational Services?

Options:

Basic Authentication

OpenShift authentication

PublicKey

Enterprise SAML

Local User Registry

Answer:

B, D

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, Foundational Services provide authentication and access control mechanisms, including Single Sign-On (SSO) integration. The two supported authentication types for SSO are:

OpenShift Authentication

IBM Cloud Pak for Integration leverages OpenShift authentication to integrate with existing identity providers.

OpenShift authentication supports OAuth-based authentication, allowing users to sign in through an identity provider configured in OpenShift, such as LDAP or OpenID Connect (OIDC).

This method enables seamless user access without requiring additional login credentials.

Enterprise SAML (Security Assertion Markup Language)

SAML authentication allows integration with enterprise identity providers (IdPs) such as IBM Security Verify, Okta, Microsoft Active Directory Federation Services (ADFS), and other SAML 2.0-compatible IdPs.

It provides federated identity management for SSO across enterprise applications, ensuring secure access to Cloud Pak services.

Why the other options are incorrect:

A. Basic Authentication – Incorrect

Basic authentication (username and password) is not used for Single Sign-On (SSO). SSO mechanisms require identity federation through OpenID Connect (OIDC) or SAML.

C. PublicKey – Incorrect

PublicKey authentication (such as SSH key-based authentication) is used for system-level access, not for SSO in Foundational Services.

E. Local User Registry – Incorrect

While local user registries can store credentials, they do not provide SSO capabilities. SSO requires federated identity providers like OpenShift authentication or SAML-based IdPs.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak Foundational Services Authentication Guide

OpenShift Authentication and Identity Providers

IBM Cloud Pak for Integration SSO Configuration

The installation of a Cloud Pak for Integration operator is hanging and the administrator needs to debug it. Which objects on the OpenShift cluster should be checked first?

Options:

The Log Aggregator in the openshift-operators namespace and then the subscription.

The InstallPlan and the pod logs from the hanging operator.

The operator's Subscription, InstallPlan, and ClusterServiceVersion.

The ibm-operator-catalog CatalogSource and the ClusterServiceVersion.

Answer:

C

Explanation:

When installing an IBM Cloud Pak for Integration (CP4I) operator in an OpenShift cluster, a hanging installation usually points to a problem in the Operator Lifecycle Manager (OLM) workflow. The best approach for debugging a stalled installation is to check the key OLM objects systematically, in the following order:

Subscription:

The Subscription (Subscription CR) manages the operator installation and upgrades.

Check whether the subscription is in the correct state (kubectl get subscription -n …).

Look for events that might indicate why the operator is stuck.

InstallPlan:

The InstallPlan determines the installation process for the operator.

If an InstallPlan is pending, it might indicate that dependencies or permissions are missing.

Use kubectl get installplan -n …

ClusterServiceVersion (CSV):

The ClusterServiceVersion (CSV) represents the installed operator and its status.

If the CSV is in a "Pending" or "Failed" state, logs will provide insights into what is wrong.

Use kubectl get csv -n …
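The Subscription → InstallPlan → ClusterServiceVersion order can be turned into a small triage helper. The Python sketch below works on the three objects as parsed from the JSON output of oc get subscription, oc get installplan, and oc get csv. It relies on the status.state field of the Subscription, status.phase of the InstallPlan and CSV, and status.reason of the CSV; the messages it returns are illustrative wording, not OLM output.

```python
from typing import Optional


def triage_olm(subscription: dict,
               install_plan: Optional[dict] = None,
               csv: Optional[dict] = None) -> str:
    """Report the first OLM object that looks stuck, checked in install order."""
    sub_state = subscription.get("status", {}).get("state", "")
    if install_plan is None:
        # No InstallPlan yet: OLM has not resolved the Subscription.
        return (f"Subscription state is '{sub_state or 'unknown'}' and no InstallPlan exists; "
                "check the Subscription conditions and its CatalogSource")

    ip_phase = install_plan.get("status", {}).get("phase", "")
    if ip_phase == "RequiresApproval":
        return "InstallPlan is waiting for manual approval"
    if ip_phase == "Failed":
        return "InstallPlan failed; inspect its status.conditions"
    if ip_phase != "Complete":
        return f"InstallPlan is still in phase '{ip_phase or 'unknown'}'"

    if csv is None:
        return "InstallPlan is complete but no ClusterServiceVersion was found"
    csv_status = csv.get("status", {})
    if csv_status.get("phase") != "Succeeded":
        return (f"ClusterServiceVersion is in phase '{csv_status.get('phase', 'unknown')}' "
                f"(reason: {csv_status.get('reason', 'n/a')})")

    return "Subscription, InstallPlan and CSV look healthy; check the operator pod logs next"


print(triage_olm({"status": {"state": "UpgradePending"}},
                 {"status": {"phase": "RequiresApproval"}}))
print(triage_olm({"status": {"state": "AtLatestKnown"}},
                 {"status": {"phase": "Complete"}},
                 {"status": {"phase": "Failed", "reason": "RequirementsNotMet"}}))
```

Checking pod logs comes last in this sketch, matching the order described above.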

Why the other options are incorrect:

Option A (Log Aggregator in openshift-operators and then Subscription) – Incorrect:

While logging is useful, the first step should be checking the OLM objects like Subscription, InstallPlan, and CSV.

Option B (InstallPlan and pod logs from the hanging operator) – Incorrect:

The InstallPlan is useful, but checking Subscription and CSV as well is necessary for a complete diagnosis.

Checking pod logs is a later step, not the first thing to do.

Option D (ibm-operator-catalog CatalogSource and ClusterServiceVersion) – Incorrect:

The CatalogSource is used to fetch the operator package, but it is not the first thing to check.

Checking Subscription, InstallPlan, and CSV first provides a more direct way to diagnose the issue.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Operator Troubleshooting

OpenShift Operator Lifecycle Manager (OLM) Guide

Debugging Operator Installation Issues in OpenShift

Which storage type is supported with the App Connect Enterprise (ACE) Dashboard instance?

Options:

Ephemeral storage

Flash storage

File storage

Raw block storage

Answer:

C

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, App Connect Enterprise (ACE) Dashboard requires persistent storage to maintain configurations, logs, and runtime data. The supported storage type for the ACE Dashboard instance is file storage because:

It supports ReadWriteMany (RWX) access mode, allowing multiple pods to access shared data.

It ensures data persistence across restarts and upgrades, which is essential for managing ACE integrations.

It is compatible with NFS, IBM Spectrum Scale, and OpenShift Container Storage (OCS), all of which provide file system-based storage.
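Whether a claim matches these requirements can be read from its spec. The Python sketch below checks a PersistentVolumeClaim, parsed from YAML or JSON into a dict, for the ReadWriteMany access mode and a Filesystem volume mode (the Kubernetes default when volumeMode is omitted). The claim names and sizes are hypothetical, and ocs-storagecluster-cephfs is only an example of an RWX file storage class; use whichever one the cluster actually provides.

```python
def check_dashboard_pvc(pvc: dict) -> list:
    """Return a list of problems; an empty list means the claim looks suitable."""
    spec = pvc.get("spec", {})
    problems = []
    if "ReadWriteMany" not in spec.get("accessModes", []):
        problems.append("accessModes does not include ReadWriteMany (RWX)")
    # Kubernetes treats a missing volumeMode as Filesystem.
    if spec.get("volumeMode", "Filesystem") != "Filesystem":
        problems.append("volumeMode is Block: a raw device, not a shared file system")
    return problems


file_claim = {
    "metadata": {"name": "ace-dashboard-content"},  # hypothetical name
    "spec": {
        "accessModes": ["ReadWriteMany"],
        "storageClassName": "ocs-storagecluster-cephfs",  # example RWX file storage class
        "resources": {"requests": {"storage": "5Gi"}},
    },
}
block_claim = {
    "metadata": {"name": "raw-block-example"},  # hypothetical name
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "volumeMode": "Block",
        "resources": {"requests": {"storage": "5Gi"}},
    },
}

print(check_dashboard_pvc(file_claim))
print(check_dashboard_pvc(block_claim))
```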

Why the other options are incorrect:

A. Ephemeral storage – Incorrect

Ephemeral storage is temporary and data is lost when the pod restarts or gets rescheduled.

ACE Dashboard needs persistent storage to retain configuration and logs.

B. Flash storage – Incorrect

Flash storage refers to SSD-based storage and is not specifically required for the ACE Dashboard.

While flash storage can be used for better performance, ACE requires file-based persistence, which is different from flash storage.

D. Raw block storage – Incorrect

Block storage is low-level storage that is used for databases and applications requiring high-performance IOPS.

ACE Dashboard needs a shared file system, which block storage does not provide.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM App Connect Enterprise (ACE) Storage Requirements

IBM Cloud Pak for Integration Persistent Storage Guide

OpenShift Persistent Volume Types

What is one way to obtain the OAuth secret and register a workload to Identity and Access Management?

Options:

Extracting the ibm-entitlement-key secret.

Through the Red Hat Marketplace.

Using a Custom Resource Definition (CRD) file.

Using the OperandConfig API file

Answer:

D

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, workloads requiring authentication with Identity and Access Management (IAM) need an OAuth secret for secure access. One way to obtain this secret and register a workload is through the OperandConfig API file.

Why Option D is Correct:

OperandConfig API is used in Cloud Pak for Integration to configure operands (software components).

It provides a mechanism to retrieve secrets, including the OAuth secret necessary for authentication with IBM IAM.

The OAuth secret is stored in a Kubernetes secret, and OperandConfig API helps configure and retrieve it dynamically for a registered workload.
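Kubernetes stores each value in a Secret's data map base64-encoded, so an OAuth secret read from the cluster has to be decoded before use. The Python sketch below decodes such a Secret after it has been read as JSON (for example, with oc get secret and -o json). The key names CLIENT_ID and CLIENT_SECRET and their values are hypothetical; the actual secret name and keys depend on how the workload is registered.

```python
import base64


def decode_secret_data(secret: dict) -> dict:
    """Decode the base64-encoded values under a Kubernetes Secret's data field."""
    return {key: base64.b64decode(value).decode("utf-8")
            for key, value in secret.get("data", {}).items()}


def encode(text: str) -> str:
    """Helper used only to build the sample Secret below."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


# Hypothetical Secret; real key names depend on the registered workload.
example_secret = {
    "kind": "Secret",
    "data": {
        "CLIENT_ID": encode("example-client"),
        "CLIENT_SECRET": encode("example-secret-value"),
    },
}

credentials = decode_secret_data(example_secret)
print(sorted(credentials))
```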

Explanation of Incorrect Answers:

A. Extracting the ibm-entitlement-key secret. → Incorrect

The ibm-entitlement-key is used for entitlement verification when pulling IBM container images from IBM Container Registry.

It is not related to OAuth authentication or IAM registration.

B. Through the Red Hat Marketplace. → Incorrect

The Red Hat Marketplace is for purchasing and deploying OpenShift-based applications but does not provide OAuth secrets for IAM authentication in Cloud Pak for Integration.

C. Using a Custom Resource Definition (CRD) file. → Incorrect

CRDs define Kubernetes API extensions, but they do not directly handle OAuth secret retrieval for IAM registration.

The OperandConfig API is specifically designed for managing operand configurations, including authentication details.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Identity and Access Management

IBM OperandConfig API Documentation

IBM Cloud Pak for Integration Security Configuration

How can a new API Connect capability be installed in an air-gapped environment?

Options:

Configure a laptop or bastion host to use Container Application Software for Enterprises files to mirror images.

An OVA form-factor of the Cloud Pak for Integration is recommended for high security deployments.

A pass-through route must be configured in the OpenShift Container Platform to connect to the online image registry.

Use secure FTP to mirror software images in the OpenShift Container Platform cluster nodes.

Answer:

A

Explanation:

In an air-gapped environment, the OpenShift cluster does not have direct internet access, which means that new software images, such as IBM API Connect, must be manually mirrored from an external source.

The correct approach for installing a new API Connect capability in an air-gapped OpenShift environment is to:

Use a laptop or a bastion host that does have internet access to pull required container images from IBM’s entitled software registry.

Leverage Container Application Software for Enterprises (CASE) files to download and transfer images to the private OpenShift registry.

Mirror the images into the private registry used by the OpenShift cluster with OpenShift’s built-in image mirroring command (oc image mirror).

This method ensures that all required container images are available locally within the air-gapped environment.
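The core of mirroring is a mapping from each image reference in the entitled registry to the same repository path in the private registry. The Python sketch below builds that mapping and the matching oc image mirror command lines. The private registry host and the image path are hypothetical, and in the CASE-based flow the image list is expected to come from the CASE files rather than being written by hand.

```python
import shlex


def split_registry(image_ref: str) -> tuple:
    """Split "host/path:tag" into (host, "path:tag").

    The first component is treated as a registry host when it contains a dot or
    a colon, or is "localhost"; otherwise Docker Hub is assumed.
    """
    first, sep, rest = image_ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first, rest
    return "docker.io", image_ref


def mirror_ref(image_ref: str, mirror_host: str) -> str:
    """Point an image reference at the same repository path in the mirror registry."""
    _, path = split_registry(image_ref)
    return f"{mirror_host}/{path}"


def mirror_commands(image_refs: list, mirror_host: str) -> list:
    """Build one oc image mirror command per image (run from the bastion host)."""
    return [["oc", "image", "mirror", ref, mirror_ref(ref, mirror_host)] for ref in image_refs]


images = ["cp.icr.io/cp/apic/example-image:1.0"]   # hypothetical image path
mirror = "registry.internal.example:5000"          # hypothetical private registry
for cmd in mirror_commands(images, mirror):
    print(shlex.join(cmd))
```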

Why the Other Options Are Incorrect?

B. An OVA form-factor of the Cloud Pak for Integration is recommended for high-security deployments. → ❌ Incorrect

IBM Cloud Pak for Integration does not provide an OVA (Open Virtual Appliance) format for API Connect deployments. It is containerized and runs on OpenShift.

C. A pass-through route must be configured in the OpenShift Container Platform to connect to the online image registry. → ❌ Incorrect

Air-gapped environments have no internet connectivity, so this approach would not work.

D. Use secure FTP to mirror software images in the OpenShift Container Platform cluster nodes. → ❌ Incorrect

OpenShift does not use FTP for image mirroring; it relies on oc image mirror and image registries for air-gapped deployments.

Final Answer: ✅ A. Configure a laptop or bastion host to use Container Application Software for Enterprises files to mirror images.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM API Connect Air-Gapped Installation Guide

IBM Container Application Software for Enterprises (CASE) Documentation

Red Hat OpenShift - Mirroring Images for Disconnected Environments

When considering storage for a highly available single-resilient queue manager, which statement is true?

Options:

A shared file system must be used that provides data write integrity, granting exclusive access to file and release locks on failure.

To tolerate an outage of an entire availability zone, cloud storage which replicates across two other zones must be used.

Persistent volumes are not supported for a resilient queue manager.

A single resilient queue manager takes much longer to recover than a multi instance queue manager

Answer:

A

Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, when deploying a highly available single-resilient queue manager, storage considerations are crucial to ensuring fault tolerance and failover capability.

A single-resilient queue manager uses a shared file system that allows different queue manager instances to access the same data, enabling failover to another node in the event of failure. The key requirement is data write integrity, ensuring that only one instance has access at a time and that locks are properly released in case of a node failure.
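The locking requirement can be illustrated on a single machine: only one holder may have an exclusive lock on the queue manager's files, and the lock must be released when that holder fails. The Python sketch below uses POSIX flock locks on a local temporary file purely as an analogy (it runs on Linux or macOS, not Windows). IBM MQ's own locking and the behavior of a particular shared file system, such as NFS lease handling, are not modeled, and the lock file name is hypothetical.

```python
import fcntl
import os
import tempfile


def try_exclusive_lock(path: str):
    """Return a file descriptor holding an exclusive lock, or None if it is held elsewhere."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        # Non-blocking: fail immediately instead of waiting for the holder.
        fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        os.close(fd)
        return None
    return fd


def simulate_failover() -> tuple:
    """Active instance locks, standby is refused, active 'fails', standby takes over."""
    with tempfile.TemporaryDirectory() as tmp:
        lock_path = os.path.join(tmp, "qmgr.lock")  # hypothetical lock file
        active = try_exclusive_lock(lock_path)
        blocked = try_exclusive_lock(lock_path)
        os.close(active)  # closing the descriptor releases the lock, as a crash would
        standby = try_exclusive_lock(lock_path)
        if standby is not None:
            os.close(standby)
        return active is not None, blocked is None, standby is not None


print(simulate_failover())
```

The three flags correspond to the properties named in option A: exclusive access for the active instance, refusal for a second writer, and release of the lock when the holder goes away.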

Option A is correct: A shared file system must support data consistency and failover mechanisms to ensure that only one instance writes to the queue manager logs and data at any time. If the active instance fails, another instance can take over using the same storage.

Option B is incorrect: While cloud storage replication across availability zones is useful, it does not replace the need for a proper shared file system with write integrity.

Option C is incorrect: Persistent volumes are supported for resilient queue managers when deployed in Kubernetes environments like OpenShift, as long as they meet the required file system properties.

Option D is incorrect: A single resilient queue manager can recover quickly by failing over to a standby node, often faster than a multi-instance queue manager, which requires additional failover processes.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM MQ High Availability Documentation

IBM Cloud Pak for Integration Storage Considerations

IBM MQ Resiliency and Disaster Recovery Guide

Which two App Connect resources enable callable flows to be processed between an integration solution in a cluster and an integration server in an on-premise system?

Options:

Sync server

Connectivity agent

Kafka sync

Switch server

Routing agent

Answer:

B, E

Explanation:

In IBM App Connect, which is part of IBM Cloud Pak for Integration (CP4I), callable flows enable integration between different environments, including on-premises systems and cloud-based integration solutions deployed in an OpenShift cluster.

To facilitate this connectivity, two critical resources are used:

1. Connectivity Agent (✅ Correct Answer)

The Connectivity Agent acts as a bridge between cloud-hosted App Connect instances and on-premises integration servers.

It enables secure bidirectional communication by allowing callable flows to connect between cloud-based and on-premise integration servers.

This is essential for hybrid cloud integrations, where some components remain on-premises for security or compliance reasons.

2. Routing Agent (✅ Correct Answer)

The Routing Agent directs incoming callable flow requests to the appropriate App Connect integration server based on configured routing rules.

It ensures low-latency and efficient message routing between cloud and on-premise systems, making it a key component for hybrid integrations.

Why the Other Options Are Incorrect?

A. Sync server → ❌ Incorrect

There is no "Sync Server" component in IBM App Connect. Synchronization happens through callable flows, but not via a "Sync Server".

C. Kafka sync → ❌ Incorrect

Kafka is used for event-driven messaging, but it is not required for callable flows between cloud and on-premises environments.

D. Switch server → ❌ Incorrect

No such component called "Switch Server" exists in App Connect.

Final Answer: ✅ B. Connectivity agent, ✅ E. Routing agent

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM App Connect - Callable Flows Documentation

IBM Cloud Pak for Integration - Hybrid Connectivity with Connectivity Agents

IBM App Connect Enterprise - On-Premise and Cloud Integration

An administrator is using the Storage Suite for Cloud Paks entitlement that they received with their Cloud Pak for Integration (CP4I) licenses. The administrator has 200 VPC of CP4I and wants to be licensed to use 8TB of OpenShift Container Storage for 3 years. They have not used or allocated any of their Storage Suite entitlement so far.

What actions must be taken with their Storage Suite entitlement?

Options:

The Storage Suite entitlement covers the administrator's license needs only if the OpenShift cluster is running on IBM Cloud or AWS.

The Storage Suite entitlement can be used for OCS; however, 8TB will require 320 VPCs of CP4I.

The Storage Suite entitlement already covers the administrator's license needs.

The Storage Suite entitlement only covers IBM Spectrum Scale, Spectrum Virtualize, Spectrum Discover, and Spectrum Protect Plus products, but the licenses can be converted to OCS.

Answer:

B

Explanation:

The IBM Storage Suite for Cloud Paks provides storage licensing for various IBM Cloud Pak solutions, including Cloud Pak for Integration (CP4I). It supports multiple storage options, such as IBM Spectrum Scale, IBM Spectrum Virtualize, IBM Spectrum Discover, IBM Spectrum Protect Plus, and OpenShift Container Storage (OCS).

Understanding Licensing Conversion:

IBM licenses CP4I based on Virtual Processor Cores (VPCs).

Storage Suite for Cloud Paks uses a conversion factor:

1 VPC of CP4I provides 25GB of OCS storage entitlement.

To calculate how many CP4I VPCs are required for 8TB (8000GB) of OCS:

$$\frac{8000\ \text{GB}}{25\ \text{GB per VPC}} = 320\ \text{VPCs}$$

Since the administrator only has 200 VPCs of CP4I, they do not have enough entitlement to cover the full 8TB of OCS storage. They would need an additional 120 VPCs to fully meet the requirement.
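The arithmetic can be checked with a few lines of Python. The sketch uses the 25GB-per-VPC conversion factor stated in this explanation and decimal units (8TB = 8000GB); both should be confirmed against the actual license terms before relying on the result.

```python
GB_PER_VPC = 25  # conversion factor stated above; verify against the license terms


def vpcs_needed(storage_gb: int, gb_per_vpc: int = GB_PER_VPC) -> int:
    """Smallest whole number of VPCs whose entitlement covers storage_gb."""
    return -(-storage_gb // gb_per_vpc)  # integer ceiling division


def storage_covered_gb(vpcs: int, gb_per_vpc: int = GB_PER_VPC) -> int:
    """Storage entitlement, in GB, provided by a number of VPCs."""
    return vpcs * gb_per_vpc


required = vpcs_needed(8000)  # 8TB expressed as 8000GB
owned = 200
shortfall = max(0, required - owned)

print(f"required={required} owned={owned} shortfall={shortfall} "
      f"covered_gb={storage_covered_gb(owned)}")
# required=320 owned=200 shortfall=120 covered_gb=5000
```

Integer ceiling division avoids floating-point rounding and makes any partial VPC count as a whole one.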

Why Other Options Are Incorrect:

A. The Storage Suite entitlement covers the administrator's license needs only if the OpenShift cluster is running on IBM Cloud or AWS.

Incorrect, because Storage Suite for Cloud Paks can be used on any OpenShift deployment, including on-premises, IBM Cloud, AWS, or other cloud providers.

C. The Storage Suite entitlement already covers the administrator's license needs.

Incorrect, because 200 VPCs of CP4I only provide 5TB (200 × 25GB) of OCS storage, but the administrator needs 8TB.

D. The Storage Suite entitlement only covers IBM Spectrum products, but the licenses can be converted to OCS.

Incorrect, because Storage Suite already includes OpenShift Container Storage (OCS) as part of its licensing model without requiring any conversion.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Storage Suite for Cloud Paks Licensing Guide

IBM Cloud Pak for Integration Licensing Information

OpenShift Container Storage Entitlement

What is the result of issuing the following command?

oc get packagemanifest -n ibm-common-services ibm-common-service-operator -o jsonpath='{.status.channels[*].name}'

Options:

It lists available upgrade channels for Cloud Pak for Integration Foundational Services.

It displays the status and names of channels in the default queue manager.

It retrieves a manifest of services packaged in Cloud Pak for Integration operators.

It returns an operator package manifest in a JSON structure.

Answer:

A

Explanation:

The command oc get packagemanifest -n ibm-common-services ibm-common-service-operator -o jsonpath='{.status.channels[*].name}' performs the following actions:

oc get packagemanifest → Retrieves the package manifest for an operator package that the cluster's catalog sources make available.

-n ibm-common-services → Specifies the namespace where IBM Common Services are installed.

ibm-common-service-operator → Targets the IBM Common Service Operator, which manages foundational services for Cloud Pak for Integration.

-o jsonpath='{.status.channels[*].name}' → Applies a JSONPath expression that extracts the name of every upgrade channel from the operator's status field and prints the names as plain text.
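To see why the output is plain text rather than a JSON document, it helps to replay the JSONPath selection on a parsed package manifest. The Python sketch below reproduces what {.status.channels[*].name} selects from a hand-written, illustrative object; the channel names are placeholders, not values from a real catalog. oc prints the matches of a [*] expression separated by single spaces.

```python
def channel_names(package_manifest: dict) -> str:
    """Mimic -o jsonpath='{.status.channels[*].name}' on a parsed PackageManifest."""
    channels = package_manifest.get("status", {}).get("channels", [])
    # Each matched name is printed, separated by a single space.
    return " ".join(channel["name"] for channel in channels)


# Illustrative object; a real PackageManifest has many more fields per channel.
sample_manifest = {
    "metadata": {"name": "ibm-common-service-operator"},
    "status": {
        "packageName": "ibm-common-service-operator",
        "defaultChannel": "channel-b",
        "channels": [{"name": "channel-a"}, {"name": "channel-b"}],
    },
}

print(channel_names(sample_manifest))  # channel-a channel-b
```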

Why Answer A is Correct:

The IBM Common Service Operator is part of Cloud Pak for Integration Foundational Services.

The status.channels[*].name field lists the available upgrade channels (e.g., stable, v1, latest).

This command helps administrators determine which upgrade paths are available for foundational services.

Explanation of Incorrect Answers:

B. It displays the status and names of channels in the default queue manager. → Incorrect

This command is not related to IBM MQ queue managers.

It queries package manifests for IBM Common Services operators, not queue managers.

C. It retrieves a manifest of services packaged in Cloud Pak for Integration operators. → Incorrect

The command does not return a full list of services; it only displays upgrade channels.

D. It returns an operator package manifest in a JSON structure. → Incorrect

The command outputs only the names of upgrade channels in plain text, not the full JSON structure of the package manifest.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak Foundational Services Overview

OpenShift PackageManifest Command Documentation

IBM Common Service Operator Details

Which statement is true regarding tracing in Cloud Pak for Integration?

Options:

If tracing has not been enabled, the administrator can turn it on without the need to redeploy the integration capability.

Distributed tracing data is enabled by default when a new capability is instantiated through the Platform Navigator.

The administrator can schedule tracing to run intermittently for each specified integration capability.

Tracing for an integration capability instance can be enabled only when deploying the instance.

Answer:

D

Explanation:

In IBM Cloud Pak for Integration (CP4I), distributed tracing allows administrators to monitor the flow of requests across multiple services. This feature helps in diagnosing performance issues and debugging integration flows.

Tracing must be enabled during the initial deployment of an integration capability instance.

Once deployed, tracing settings cannot be changed dynamically without redeploying the instance.

This ensures that tracing configurations are properly set up and integrated with observability tools like OpenTelemetry, Jaeger, or Zipkin.

Analysis of the Options:

A. If tracing has not been enabled, the administrator can turn it on without the need to redeploy the integration capability. (Incorrect)

Tracing cannot be enabled after deployment. It must be configured during the initial deployment process.

B. Distributed tracing data is enabled by default when a new capability is instantiated through the Platform Navigator. (Incorrect)

Tracing is not enabled by default. The administrator must manually enable it during deployment.

C. The administrator can schedule tracing to run intermittently for each specified integration capability. (Incorrect)

There is no scheduling option for tracing in CP4I. Once enabled, tracing runs continuously based on the chosen settings.

D. Tracing for an integration capability instance can be enabled only when deploying the instance. (Correct)

This is the correct answer. Tracing settings are defined at deployment and cannot be modified afterward without redeploying the instance.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration - Tracing and Monitoring

Enabling Distributed Tracing in IBM CP4I

IBM OpenTelemetry and Jaeger Tracing Integration