Cloud Associate (JNCIA-Cloud) Questions and Answers

Your organization has legacy virtual machine workloads that need to be managed within a Kubernetes deployment.

Which Kubernetes add-on would be used to satisfy this requirement?

Options:

ADOT

Canal

KubeVirt

Romana

Answer:

C

Explanation:

Kubernetes is designed primarily for managing containerized workloads, but it can also support legacy virtual machine (VM) workloads through specific add-ons. Let’s analyze each option:

A. ADOT

Incorrect: The AWS Distro for OpenTelemetry (ADOT) is a tool for collecting and exporting telemetry data (metrics, logs, traces). It is unrelated to running VMs in Kubernetes.

B. Canal

Incorrect: Canal is a networking solution that combines Flannel and Calico to provide overlay networking and network policy enforcement in Kubernetes. It does not support VM workloads.

C. KubeVirt

Correct: KubeVirt is a Kubernetes add-on that enables the management of virtual machines alongside containers in a Kubernetes cluster. It allows organizations to run legacy VM workloads while leveraging Kubernetes for orchestration.

D. Romana

Incorrect: Romana is a network policy engine for Kubernetes that provides security and segmentation. It does not support VM workloads.

Why KubeVirt?

VM Support in Kubernetes: KubeVirt extends Kubernetes to manage both containers and VMs, enabling organizations to transition legacy workloads to a Kubernetes environment.

Unified Orchestration: By integrating VMs into Kubernetes, KubeVirt simplifies the management of hybrid workloads.
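To show what KubeVirt adds to a cluster, the sketch below builds a minimal `VirtualMachine` custom resource (API group `kubevirt.io/v1`) as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The VM name, memory size, and disk image reference are hypothetical placeholders. A real legacy workload would more likely boot from a persistent volume than from a container disk, so treat this as a shape sketch rather than a deployment recipe.

```python
import json


def kubevirt_vm(name, memory, disk_image):
    """Build a minimal KubeVirt VirtualMachine manifest (all values hypothetical)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            # Keep the VM running, much as a Deployment keeps its pods running.
            "runStrategy": "Always",
            "template": {
                "metadata": {"labels": {"kubevirt.io/domain": name}},
                "spec": {
                    "domain": {
                        "devices": {
                            # The disk name must match a volume defined below.
                            "disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}]
                        },
                        "resources": {"requests": {"memory": memory}},
                    },
                    "volumes": [
                        # containerDisk: a VM disk image packaged inside a container image.
                        {"name": "rootdisk", "containerDisk": {"image": disk_image}}
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    vm = kubevirt_vm("legacy-app", "2Gi", "registry.example.com/legacy-app-disk:latest")
    print(json.dumps(vm, indent=2))
```

After the manifest is applied, the VM appears as a Kubernetes object. It can be scheduled, labeled, and networked alongside ordinary pods.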

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes extensions like KubeVirt as part of its curriculum on cloud-native architectures. Understanding how to integrate legacy workloads into Kubernetes is essential for modernizing IT infrastructure.

For example, Juniper Contrail integrates with Kubernetes and KubeVirt to provide networking and security for hybrid workloads.

You just uploaded a qcow2 image of a vSRX virtual machine in OpenStack.

In this scenario, which service stores the virtual machine (VM) image?

Options:

Glance

Ironic

Neutron

Nova

Answer:

A

Explanation:

OpenStack provides various services to manage cloud infrastructure resources, including virtual machine (VM) images. Let’s analyze each option:

A. Glance

Correct: Glance is the OpenStack service responsible for managing and storing VM images. It provides a repository for uploading, discovering, and retrieving images in various formats, such as qcow2, raw, or ISO.

B. Ironic

Incorrect: Ironic is the OpenStack bare-metal provisioning service. It is used to manage physical servers, not VM images.

C. Neutron

Incorrect: Neutron is the OpenStack networking service that manages virtual networks, routers, and IP addresses. It does not store VM images.

D. Nova

Incorrect: Nova is the OpenStack compute service that manages the lifecycle of virtual machines. While Nova interacts with Glance to retrieve VM images for deployment, it does not store the images itself.

Why Glance?

Image Repository: Glance acts as the central repository for VM images, enabling users to upload, share, and deploy images across the OpenStack environment.

Integration with Nova: When deploying a VM, Nova retrieves the required image from Glance to create the instance.
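The upload in this scenario is usually done with the OpenStack CLI. The Python sketch below only assembles that command and does not run it. The image name `vSRX3` matches the exhibit question later in this document, while the file name `vsrx.qcow2` is a placeholder. Nova later refers to the stored image by name or ID, for example with `openstack server create --image vSRX3 ...`.

```python
import shlex

# Image visibility values accepted by `openstack image create`.
VISIBILITIES = {"private", "public", "shared", "community"}


def glance_upload_cmd(image_name, qcow2_path, visibility="private"):
    """Build an `openstack image create` call that stores a qcow2 file in Glance."""
    if visibility not in VISIBILITIES:
        raise ValueError(f"unknown visibility: {visibility}")
    return [
        "openstack", "image", "create",
        "--disk-format", "qcow2",      # format of the uploaded disk file
        "--container-format", "bare",  # no additional container wrapper
        "--file", qcow2_path,          # local file uploaded to Glance
        f"--{visibility}",             # who may boot from the image
        image_name,
    ]


if __name__ == "__main__":
    print(shlex.join(glance_upload_cmd("vSRX3", "vsrx.qcow2")))
```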

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack services, including Glance, as part of its cloud infrastructure curriculum. Understanding Glance’s role in image management is essential for deploying and managing virtual machines in OpenStack.

For example, Juniper Contrail integrates with OpenStack through a Neutron plugin, providing networking for the VM instances that Nova boots from Glance images.

Which two statements are correct about the Kubernetes networking model? (Choose two.)

Options:

Pods are allowed to communicate if they are only in the default namespaces.

Pods are not allowed to communicate if they are in different namespaces.

Full communication between pods is allowed across nodes without requiring NAT.

Each pod has its own IP address in a flat, shared networking namespace.

Answer:

C, D

Explanation:

Kubernetes networking is designed to provide seamless communication between pods, regardless of their location in the cluster. Let’s analyze each statement:

A. Pods are allowed to communicate if they are only in the default namespaces.

Incorrect: Pods can communicate with each other regardless of the namespace they belong to. Namespaces are used for logical grouping and isolation but do not restrict inter-pod communication.

B. Pods are not allowed to communicate if they are in different namespaces.

Incorrect: Pods in different namespaces can communicate with each other as long as there are no network policies restricting such communication. Namespaces do not inherently block communication.

C. Full communication between pods is allowed across nodes without requiring NAT.

Correct: Kubernetes networking is designed so that pods can communicate directly with each other across nodes without Network Address Translation (NAT). Each pod has a unique IP address, and the underlying network ensures direct communication.

D. Each pod has its own IP address in a flat, shared networking namespace.

Correct: In Kubernetes, each pod is assigned a unique IP address in a flat network space. This allows pods to communicate with each other as if they were on the same network, regardless of the node they are running on.

Why These Statements?

Flat Networking Model: Kubernetes uses a flat networking model where each pod gets its own IP address, simplifying communication and eliminating the need for NAT.

Cross-Node Communication: The design ensures that pods can communicate seamlessly across nodes, enabling scalable and distributed applications.
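The flat model comes down to address planning. Every node receives its own slice of one cluster-wide pod range, so every pod IP is unique and directly routable. The sketch below assumes a hypothetical pod CIDR of `10.244.0.0/16` split into one `/24` per node, and it assumes the first address of each slice is reserved for the node's bridge. Real CNI plugins (including Contrail) make their own allocation choices.

```python
import ipaddress

# Hypothetical cluster-wide pod CIDR; real clusters set this at install time.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")


def assign_pod_ips(pods_per_node, node_prefix=24):
    """Give each node its own slice of the pod CIDR and each pod a unique IP."""
    node_subnets = CLUSTER_CIDR.subnets(new_prefix=node_prefix)
    plan = {}
    for node_idx, pod_count in enumerate(pods_per_node):
        hosts = next(node_subnets).hosts()
        next(hosts)  # assumption: first address kept for the node's bridge/gateway
        for pod_idx in range(pod_count):
            plan[(f"node-{node_idx}", f"pod-{pod_idx}")] = next(hosts)
    return plan


if __name__ == "__main__":
    plan = assign_pod_ips([2, 3])
    for (node, pod), ip in plan.items():
        print(node, pod, ip)
    # Every pod IP is unique and inside one flat range, so pods can reach
    # each other across nodes by their real addresses, without NAT.
    assert len(set(plan.values())) == len(plan)
    assert all(ip in CLUSTER_CIDR for ip in plan.values())
```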

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes Kubernetes networking concepts, including pod-to-pod communication and the flat networking model. Understanding these principles is essential for designing and managing Kubernetes clusters.

For example, Juniper Contrail provides advanced networking features for Kubernetes, ensuring efficient and secure pod communication across nodes.

Which component of a software-defined networking (SDN) controller defines where data packets are forwarded by a network device?

Options:

the operational plane

the forwarding plane

the management plane

the control plane

Answer:

D

Explanation:

Software-Defined Networking (SDN) separates the control plane from the data (forwarding) plane, enabling centralized control and programmability of network devices. Let’s analyze each option:

A. the operational plane

Incorrect: The operational plane is not a standard term in SDN architecture. It may refer to monitoring or management tasks but does not define packet forwarding behavior.

B. the forwarding plane

Incorrect: The forwarding plane (also known as the data plane) is responsible for forwarding packets based on rules provided by the control plane. It does not define where packets are forwarded; it simply executes the instructions.

C. the management plane

Incorrect: The management plane handles device configuration, monitoring, and administrative tasks. It does not determine packet forwarding paths.

D. the control plane

Correct: The control plane is responsible for making decisions about where data packets are forwarded. In SDN, the control plane is centralized in the SDN controller, which calculates forwarding paths and communicates them to network devices via protocols like OpenFlow.

Why the Control Plane?

Centralized Decision-Making: The control plane determines the optimal paths for packet forwarding and updates the forwarding plane accordingly.

Programmability: SDN controllers allow administrators to programmatically define forwarding rules, enabling dynamic and flexible network configurations.
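The control/forwarding split can be modeled in a few lines. In the toy sketch below, a hypothetical `Controller` holds the topology, computes hop-count shortest paths with breadth-first search, and installs destination-to-next-hop entries. A hypothetical `Switch` only looks those entries up. This is an illustration of the division of labor, not OpenFlow and not how Contrail computes routes.

```python
from collections import deque


class Switch:
    """Data (forwarding) plane: only looks up rules pushed by the controller."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination -> next hop

    def forward(self, dst):
        return self.flow_table.get(dst)  # None: no rule installed, packet dropped


class Controller:
    """Control plane: holds the topology, computes paths, programs switches."""

    def __init__(self, links):
        self.adj = {}
        for a, b in links:
            self.adj.setdefault(a, set()).add(b)
            self.adj.setdefault(b, set()).add(a)

    def _parents_toward(self, dst):
        # BFS outward from dst; each node's BFS parent is its next hop toward dst.
        parent = {dst: None}
        queue = deque([dst])
        while queue:
            node = queue.popleft()
            for nbr in sorted(self.adj[node]):
                if nbr not in parent:
                    parent[nbr] = node
                    queue.append(nbr)
        return parent

    def program(self, switches):
        """Push one forwarding entry per (switch, destination) pair."""
        for dst in self.adj:
            for node, hop in self._parents_toward(dst).items():
                if hop is not None:
                    switches[node].flow_table[dst] = hop


if __name__ == "__main__":
    links = [("s1", "s2"), ("s2", "s3"), ("s1", "s4"), ("s4", "s3"), ("s3", "s5")]
    switches = {name: Switch(name) for name in ["s1", "s2", "s3", "s4", "s5"]}
    Controller(links).program(switches)
    print(switches["s1"].forward("s5"))
```

The switches never see the topology. If a link changes, only the controller recomputes and re-pushes rules, which is the centralization described above.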

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding SDN architecture and its components. The separation of the control plane and forwarding plane is a foundational concept in SDN, enabling scalable and programmable networks.

For example, Juniper Contrail serves as an SDN controller, centralizing control over network devices and enabling advanced features like network automation and segmentation.

Which OpenStack object enables multitenancy?

Options:

role

flavor

image

project

Answer:

D

Explanation:

Multitenancy is a key feature of OpenStack, enabling multiple users or organizations to share cloud resources while maintaining isolation. Let’s analyze each option:

A. role

Incorrect: A role defines permissions and access levels for users within a project. While roles are important for managing user privileges, they do not directly enable multitenancy.

B. flavor

Incorrect: A flavor specifies the compute, memory, and storage capacity of a VM instance. It is unrelated to enabling multitenancy.

C. image

Incorrect: An image is a template used to create VM instances. While images are essential for deploying VMs, they do not enable multitenancy.

D. project

Correct: A project (also known as a tenant) is the primary mechanism for enabling multitenancy in OpenStack. Each project represents an isolated environment where resources (e.g., VMs, networks, storage) are provisioned and managed independently.

Why Project?

Isolation: Projects ensure that resources allocated to one tenant are isolated from others, enabling secure and efficient resource sharing.

Resource Management: Each project has its own quotas, users, and resources, making it the foundation of multitenancy in OpenStack.
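To make the idea of project-scoped isolation concrete, here is a toy in-memory model with per-project instance quotas. The class names are hypothetical and the model is far simpler than Keystone plus Nova. In a real deployment, the equivalent setup would use commands such as `openstack project create` and `openstack quota set --instances`.

```python
class QuotaExceeded(Exception):
    """Raised when a project tries to exceed its instance quota."""


class Project:
    """Toy stand-in for an OpenStack project (tenant)."""

    def __init__(self, name, instance_quota):
        self.name = name
        self.instance_quota = instance_quota
        self.instances = []


class ToyCloud:
    """Hypothetical cloud that scopes every operation to one project."""

    def __init__(self):
        self.projects = {}

    def create_project(self, name, instance_quota):
        self.projects[name] = Project(name, instance_quota)

    def boot(self, project_name, vm_name):
        project = self.projects[project_name]
        if len(project.instances) >= project.instance_quota:
            raise QuotaExceeded(f"{project_name} is at its quota of {project.instance_quota}")
        project.instances.append(vm_name)

    def list_servers(self, project_name):
        # A user scoped to one project only sees that project's resources.
        return list(self.projects[project_name].instances)


if __name__ == "__main__":
    cloud = ToyCloud()
    cloud.create_project("red", instance_quota=1)
    cloud.create_project("blue", instance_quota=2)
    cloud.boot("red", "red-vm1")
    cloud.boot("blue", "blue-vm1")
    print(cloud.list_servers("red"))
```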

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack’s multitenancy model, including the role of projects. Recognizing how projects enable resource isolation is essential for managing shared cloud environments.

For example, Juniper Contrail integrates with OpenStack Keystone to enforce multitenancy and network segmentation for projects.

Which two consoles are provided by the OpenShift Web UI? (Choose two.)

Options:

administrator console

developer console

operational console

management console

Answer:

A, B

Explanation:

OpenShift provides a web-based user interface (Web UI) that offers two distinct consoles tailored to different user roles. Let’s analyze each option:

A. administrator console

Correct:

The administrator console is designed for cluster administrators. It provides tools for managing cluster resources, configuring infrastructure, monitoring performance, and enforcing security policies.

B. developer console

Correct:

The developer console is designed for application developers. It focuses on building, deploying, and managing applications, including creating projects, defining pipelines, and monitoring application health.

C. operational console

Incorrect:

There is no "operational console" in OpenShift. This term does not correspond to any official OpenShift Web UI component.

D. management console

Incorrect:

While "management console" might sound generic, OpenShift specifically refers to the administrator console for management tasks. This term is not officially used in the OpenShift Web UI.

Why These Consoles?

Administrator Console: Provides a centralized interface for managing the cluster's infrastructure and ensuring smooth operation.

Developer Console: Empowers developers to focus on application development without needing to interact with low-level infrastructure details.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenShift's Web UI and its role in cluster management and application development. Recognizing the differences between the administrator and developer consoles is essential for effective collaboration in OpenShift environments.

For example, Juniper Contrail can act as the networking (CNI) plugin for OpenShift, providing pod networking for workloads that are managed from either console.

You are asked to deploy a cloud solution for a customer that requires strict control over their resources and data. The deployment must allow the customer to implement and manage precise security controls to protect their data.

Which cloud deployment model should be used in this situation?

Options:

private cloud

hybrid cloud

dynamic cloud

public cloud

Answer:

A

Explanation:

Cloud deployment models define how cloud resources are provisioned and managed. The models named in the options compare as follows:

Public Cloud: Resources are shared among multiple organizations and managed by a third-party provider. Examples include AWS, Microsoft Azure, and Google Cloud Platform.

Private Cloud: Resources are dedicated to a single organization and can be hosted on-premises or by a third-party provider. Private clouds offer greater control over security, compliance, and resource allocation.

Hybrid Cloud: Combines public and private clouds, allowing data and applications to move between them. This model provides flexibility and optimization of resources.

Dynamic Cloud: Not a standard cloud deployment model. It may refer to the dynamic scaling capabilities of cloud environments but is not a recognized category.

In this scenario, the customer requires strict control over their resources and data, as well as the ability to implement and manage precise security controls. A private cloud is the most suitable deployment model because:

Dedicated Resources: The infrastructure is exclusively used by the organization, ensuring isolation and control.

Customizable Security: The organization can implement its own security policies, encryption mechanisms, and compliance standards.

On-Premises Option: If hosted internally, the organization retains full physical control over the data center and hardware.

Why Not Other Options?

Public Cloud: Shared infrastructure means less control over security and compliance. While public clouds offer robust security features, they may not meet the strict requirements of the customer.

Hybrid Cloud: While hybrid clouds combine the benefits of public and private clouds, they introduce complexity and may not provide the level of control the customer desires.

Dynamic Cloud: Not a valid deployment model.

JNCIA Cloud References:

The JNCIA-Cloud certification covers cloud deployment models and their use cases. Private clouds are highlighted as ideal for organizations with stringent security and compliance requirements, such as financial institutions, healthcare providers, and government agencies.

For example, Juniper Contrail supports private cloud deployments by providing advanced networking and security features, enabling organizations to build and manage secure, isolated cloud environments.

Which component of Kubernetes runs on all nodes and ensures that the containers are running in a pod?

Options:

kubelet

kube-proxy

container runtime

kube controller

Answer:

A

Explanation:

Kubernetes components work together to ensure the proper functioning of the cluster and its workloads. Let’s analyze each option:

A. kubelet

Correct:

The kubelet is a critical Kubernetes component that runs on every node in the cluster. It is responsible for ensuring that containers are running in pods as expected. The kubelet communicates with the container runtime (through the Container Runtime Interface, CRI) to start, stop, and monitor containers based on the pod specifications it receives from the API server.

B. kube-proxy

Incorrect:

The kube-proxy is a network proxy that runs on each node and implements Kubernetes Services by programming rules (for example, iptables or IPVS) that load-balance and route traffic to pods. It does not manage the state of containers or pods.

C. container runtime

Incorrect:

The container runtime (e.g., containerd, CRI-O) is responsible for running containers on the node. While it executes the containers, it does not ensure that they match the pod specification. This responsibility lies with the kubelet.

D. kube controller

Incorrect:

The kube controller (kube-controller-manager) is part of the control plane and ensures that the desired state of the cluster (e.g., number of replicas) is maintained. It runs on control-plane nodes rather than on every node, and it does not directly manage the state of containers in pods.

Why kubelet?

Pod Lifecycle Management: The kubelet ensures that the containers specified in a pod's definition are running and healthy. If a container crashes, the kubelet restarts it according to the pod's restartPolicy.

Node-Level Agent: The kubelet acts as the primary node agent, interfacing with the container runtime and the Kubernetes API server to manage pod lifecycle operations.
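The kubelet's job is a reconcile loop: compare the containers a pod should have with what the runtime reports, then act on the difference. The sketch below models one such pass. `FakeRuntime` is a hypothetical stand-in for a CRI runtime, and the container states (`running`, `failed`, `succeeded`) are simplified. The real kubelet also applies exponential back-off before restarts and runs health probes, and both are omitted here.

```python
from dataclasses import dataclass, field


@dataclass
class FakeRuntime:
    """Hypothetical stand-in for a container runtime: container name -> state."""
    containers: dict = field(default_factory=dict)

    def start(self, name):
        self.containers[name] = "running"


def should_restart(state, restart_policy):
    """Mirror pod restartPolicy semantics for an exited container."""
    if restart_policy == "Always":
        return state in ("failed", "succeeded")
    if restart_policy == "OnFailure":
        return state == "failed"
    return False  # "Never"


def sync_pod(pod_spec, runtime, restart_policy="Always"):
    """One reconcile pass: make actual container state match the pod spec."""
    actions = []
    for name in pod_spec["containers"]:
        state = runtime.containers.get(name)
        if state is None:
            runtime.start(name)  # container missing: create it
            actions.append(("start", name))
        elif should_restart(state, restart_policy):
            runtime.start(name)  # container exited: restart it
            actions.append(("restart", name))
    return actions


if __name__ == "__main__":
    runtime = FakeRuntime({"app": "failed"})
    print(sync_pod({"containers": ["app", "log-shipper"]}, runtime))
    print(runtime.containers)
```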

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes architecture, including the role of the kubelet. Understanding how the kubelet works is essential for managing the health and operation of pods in Kubernetes clusters.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking features, relying on the kubelet to manage pod lifecycle events effectively.

Which Docker component builds, runs, and distributes Docker containers?

Options:

dockerd

docker registry

docker cli

container

Answer:

A

Explanation:

Docker is a popular containerization platform that includes several components to manage the lifecycle of containers. Let’s analyze each option:

A. dockerd

Correct: The Docker daemon (dockerd) is the core component responsible for building, running, and distributing Docker containers. It manages Docker objects such as images, containers, networks, and volumes, and handles requests from the Docker CLI or API.

B. docker registry

Incorrect: A Docker registry is a repository for storing and distributing Docker images. While it plays a role in distributing containers, it does not build or run them.

C. docker cli

Incorrect: The Docker CLI (Command Line Interface) is a tool used to interact with the Docker daemon (dockerd). It is not responsible for building, running, or distributing containers but rather sends commands to the daemon.

D. container

Incorrect: A container is an instance of a running application created from a Docker image. It is not a component of Docker but rather the result of the Docker daemon's operations.

Why dockerd?

Central Role: The Docker daemon (dockerd) is the backbone of the Docker platform, managing all aspects of container lifecycle management.

Integration: It interacts with the host operating system and container runtime to execute tasks like building images, starting containers, and managing resources.
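The CLI/daemon split is visible on the wire. The `docker` CLI sends HTTP requests to dockerd's Engine API, on Linux usually over the UNIX socket `/var/run/docker.sock`. `docker ps`, for example, corresponds to `GET /containers/json`. The minimal sketch below speaks that API directly with the standard library. It assumes the default socket path and an unversioned API path, and `call_dockerd` needs a running daemon and permission to access the socket.

```python
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # default dockerd socket on Linux hosts


def build_request(method, path):
    """Raw HTTP/1.1 request for the Docker Engine API (unversioned path)."""
    return (
        f"{method} {path} HTTP/1.1\r\n"
        "Host: docker\r\n"  # HTTP/1.1 requires a Host header; the value is arbitrary here
        "Connection: close\r\n"
        "\r\n"
    ).encode("ascii")


def call_dockerd(method, path, sock_path=DOCKER_SOCKET):
    """Send one request to dockerd and return the raw response text."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(sock_path)
        sock.sendall(build_request(method, path))
        chunks = []
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    # Headers plus body; the body may use chunked transfer encoding.
    return b"".join(chunks).decode("utf-8", errors="replace")


if __name__ == "__main__":
    # Roughly what `docker ps` asks the daemon for.
    print(call_dockerd("GET", "/containers/json"))
```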

JNCIA Cloud References:

The JNCIA-Cloud certification covers Docker as part of its containerization curriculum. Understanding the role of the Docker daemon is essential for managing containerized applications in cloud environments.

For example, Juniper Contrail integrates with Docker to provide advanced networking and security features for containerized workloads, relying on the Docker daemon to manage containers.

Which feature of Linux enables kernel-level isolation of global resources?

Options:

ring protection

stack protector

namespaces

shared libraries

Answer:

C

Explanation:

Linux provides several mechanisms for isolating resources and ensuring security. Let’s analyze each option:

A. ring protection

Incorrect: Ring protection refers to CPU privilege levels (e.g., Rings 0–3) that control access to system resources. While important for security, it does not provide kernel-level isolation of global resources.

B. stack protector

Incorrect: Stack protector is a compiler feature that helps prevent buffer overflow attacks by adding guard variables to function stacks. It is unrelated to resource isolation.

C. namespaces

Correct: Namespaces are a Linux kernel feature that provides kernel-level isolation of global resources such as process IDs, network interfaces, mount points, and user IDs. Each namespace has its own isolated view of these resources, enabling features like containerization.

D. shared libraries

Incorrect: Shared libraries allow multiple processes to use the same code, reducing memory usage. They do not provide isolation or security.

Why Namespaces?

Resource Isolation: Namespaces isolate processes, networks, and other resources, ensuring that changes in one namespace do not affect others.

Containerization Foundation: Namespaces are a core technology behind containerization platforms like Docker and Kubernetes, enabling lightweight and secure environments.
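On Linux, each process exposes its namespace memberships as symbolic links under `/proc/<pid>/ns/`, with targets such as `net:[4026531840]`. Two processes share a namespace exactly when the inode numbers in those links match. The sketch below reads and parses them. It is Linux-only, and it skips entries that cannot be read rather than assuming every link is accessible.

```python
import os


def parse_ns_link(target):
    """Split a namespace link such as 'net:[4026531840]' into (type, inode)."""
    kind, sep, rest = target.partition(":[")
    if not sep or not rest.endswith("]"):
        raise ValueError(f"unexpected namespace link: {target!r}")
    return kind, int(rest[:-1])


def namespaces_of(pid="self"):
    """Map namespace entry name -> inode number for a process (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for entry in sorted(os.listdir(ns_dir)):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue  # skip entries that are unreadable or unexpected
        result[entry] = inode
    return result


if __name__ == "__main__":
    # A process inside a container shows different inode numbers
    # for the namespaces the container runtime created for it.
    print(namespaces_of())
```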

JNCIA Cloud References:

The JNCIA-Cloud certification covers Linux fundamentals, including namespaces, as part of its containerization curriculum. Understanding namespaces is essential for managing containerized workloads in cloud environments.

For example, Juniper Contrail leverages namespaces to isolate network resources in containerized environments, ensuring secure and efficient operation.

You want to limit the memory, CPU, and network utilization of a set of processes running on a Linux host.

Which Linux feature would you configure in this scenario?

Options:

virtual routing and forwarding instances

network namespaces

control groups

slicing

Answer:

C

Explanation:

Linux provides several features to manage system resources and isolate processes. Let’s analyze each option:

A. virtual routing and forwarding instances

Incorrect: Virtual Routing and Forwarding (VRF) is a networking feature used to create multiple independent routing tables on a single router or host. It is unrelated to limiting memory, CPU, or network utilization for processes.

B. network namespaces

Incorrect: Network namespaces are used to isolate network resources (e.g., interfaces, routing tables) for processes. While they can help with network isolation, they do not directly limit memory or CPU usage.

C. control groups

Correct: Control Groups (cgroups) are a Linux kernel feature that allows you to limit, account for, and isolate the resource usage (CPU, memory, block I/O, number of processes) of a set of processes. Network traffic can also be controlled per cgroup, indirectly, for example by tagging packets with the cgroup v1 net_cls controller and applying traffic control (tc) rules to the tagged traffic. cgroups are commonly used in containerization technologies like Docker and Kubernetes to enforce resource limits.

D. slicing

Incorrect: "Slicing" is not a recognized Linux feature for resource management. This term may refer to dividing resources in other contexts but is not relevant here.

Why Control Groups?

Resource Management: cgroups provide fine-grained control over memory and CPU utilization (and, combined with traffic control, network utilization), ensuring that processes do not exceed their allocated resources.

Containerization Foundation: cgroups are a core technology behind container runtimes like containerd and orchestration platforms like Kubernetes.
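With cgroup v2, limits are set by writing to files in the cgroup filesystem. `memory.max` takes a byte count, `cpu.max` takes `"<quota> <period>"` in microseconds, and writing a PID to `cgroup.procs` moves that process into the group. The sketch below assumes cgroup v2 mounted at `/sys/fs/cgroup`, root privileges, and that the `memory` and `cpu` controllers are already enabled in the parent's `cgroup.subtree_control`. The group name and limits are hypothetical.

```python
from pathlib import Path

CGROUP_ROOT = Path("/sys/fs/cgroup")  # typical cgroup v2 mount point (assumed)


def cpu_max_value(cpus, period_us=100_000):
    """cpu.max takes '<quota> <period>' in microseconds; 0.5 CPU -> '50000 100000'."""
    return f"{int(cpus * period_us)} {period_us}"


def limit_processes(name, pids, memory_bytes, cpus, root=CGROUP_ROOT):
    """Create a child cgroup, set memory/CPU limits, and move pids into it."""
    group = Path(root) / name
    group.mkdir(exist_ok=True)
    (group / "memory.max").write_text(f"{memory_bytes}\n")
    (group / "cpu.max").write_text(cpu_max_value(cpus) + "\n")
    for pid in pids:
        # Each write to cgroup.procs moves one process into the group.
        with open(group / "cgroup.procs", "a") as procs:
            procs.write(f"{pid}\n")
    return group


if __name__ == "__main__":
    # Example only (requires root): cap two hypothetical PIDs at 256 MiB and 1.5 CPUs.
    limit_processes("batch-jobs", [12345, 12346], 256 * 1024 * 1024, 1.5)
```

As noted above, network shaping is not set through these files. It needs a separate traffic-control setup.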

JNCIA Cloud References:

The JNCIA-Cloud certification covers Linux features like cgroups as part of its containerization curriculum. Understanding cgroups is essential for managing resource allocation in cloud environments.

For example, Juniper Contrail integrates with Kubernetes to manage containerized workloads, leveraging cgroups to enforce resource limits.

Your e-commerce application is deployed on a public cloud. As compared to the rest of the year, it receives substantial traffic during the Christmas season.

In this scenario, which cloud computing feature automatically increases or decreases the resource based on the demand?

Options:

resource pooling

on-demand self-service

rapid elasticity

broad network access

Answer:

C

Explanation:

Cloud computing provides several key characteristics that enable flexible and scalable resource management. Let’s analyze each option:

A. resource pooling

Incorrect: Resource pooling refers to the sharing of computing resources (e.g., storage, processing power) among multiple users or tenants. While important, it does not directly address the automatic scaling of resources based on demand.

B. on-demand self-service

Incorrect: On-demand self-service allows users to provision resources (e.g., virtual machines, storage) without requiring human intervention. While this is a fundamental feature of cloud computing, it does not describe the ability to automatically scale resources.

C. rapid elasticity

Correct: Rapid elasticity is the ability of a cloud environment to dynamically increase or decrease resources based on demand. This ensures that applications can scale up during peak traffic periods (e.g., Christmas season) and scale down during low-demand periods, optimizing cost and performance.

D. broad network access

Incorrect: Broad network access refers to the ability to access cloud services over the internet from various devices. While essential for accessibility, it does not describe the scaling of resources.

Why Rapid Elasticity?

Dynamic Scaling: Rapid elasticity ensures that resources are provisioned or de-provisioned automatically to meet changing workload demands.

Cost Efficiency: By scaling resources only when needed, organizations can optimize costs while maintaining performance.
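Rapid elasticity is usually driven by a proportional rule. For example, the Kubernetes Horizontal Pod Autoscaler documents `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that rule and clamps the result to configured bounds. It omits the HPA's tolerance band and stabilization window, and public-cloud autoscaling groups may use different policies. The utilization figures in the example are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Scale replicas in proportion to load, clamped to [min_replicas, max_replicas]."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Holiday peak: 4 replicas at 90% CPU against a 60% target -> scale out.
    print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=20))
    # Quiet period: 6 replicas at 20% CPU -> scale back in.
    print(desired_replicas(6, 20, 60, min_replicas=2, max_replicas=20))
```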

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes the key characteristics of cloud computing, including rapid elasticity. Understanding this concept is essential for designing scalable and cost-effective cloud architectures.

For example, Juniper Contrail supports cloud elasticity by enabling dynamic provisioning of network resources in response to changing demands.

You are asked to provision a bare-metal server using OpenStack.

Which service is required to satisfy this requirement?

Options:

Ironic

Zun

Trove

Magnum

Answer:

A

Explanation:

OpenStack is an open-source cloud computing platform that provides various services for managing compute, storage, and networking resources. To provision a bare-metal server in OpenStack, the Ironic service is required. Let’s analyze each option:

A. Ironic

Correct: OpenStack Ironic is a bare-metal provisioning service that allows you to manage and provision physical servers as if they were virtual machines. It automates tasks such as hardware discovery, configuration, and deployment of operating systems on bare-metal servers.

B. Zun

Incorrect: OpenStack Zun is a container service that manages the lifecycle of containers. It is unrelated to bare-metal provisioning.

C. Trove

Incorrect: OpenStack Trove is a Database as a Service (DBaaS) solution that provides managed database instances. It does not handle bare-metal provisioning.

D. Magnum

Incorrect: OpenStack Magnum is a container orchestration service that supports Kubernetes, Docker Swarm, and other container orchestration engines. It is focused on containerized workloads, not bare-metal servers.

Why Ironic?

Purpose-Built for Bare-Metal: Ironic is specifically designed to provision and manage bare-metal servers, making it the correct choice for this requirement.

Automation: Ironic automates the entire bare-metal provisioning process, including hardware discovery, configuration, and OS deployment.
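Ironic tracks each physical node through a provision-state machine. The administrator moves a node forward with verbs that correspond to CLI commands such as `openstack baremetal node manage`, `provide`, and `deploy`. The sketch below models a simplified subset of that machine using stable states only. The real machine passes through intermediate states (verifying, cleaning, deploying, deleting) and has failure states, all of which are omitted here.

```python
# Simplified subset of Ironic's provision-state machine (stable states only).
TRANSITIONS = {
    ("enroll", "manage"): "manageable",     # credentials verified
    ("manageable", "provide"): "available", # after cleaning, ready for use
    ("available", "deploy"): "active",      # OS image written, node in service
    ("active", "undeploy"): "available",    # after deleting/cleaning, back in the pool
}


class InvalidTransition(Exception):
    """Raised when a verb is not allowed from the node's current state."""


def apply(state, verb):
    try:
        return TRANSITIONS[(state, verb)]
    except KeyError:
        raise InvalidTransition(f"cannot '{verb}' a node in state '{state}'") from None


def provision(verbs, state="enroll"):
    """Walk a node through a sequence of verbs, returning the final state."""
    for verb in verbs:
        state = apply(state, verb)
    return state


if __name__ == "__main__":
    print(provision(["manage", "provide", "deploy"]))
```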

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack as part of its cloud infrastructure curriculum. Understanding OpenStack services like Ironic is essential for managing bare-metal and virtualized environments in cloud deployments.

For example, Juniper Contrail integrates with OpenStack to provide networking and security for both virtualized and bare-metal workloads. Proficiency with OpenStack services ensures efficient management of diverse cloud resources.

Your organization manages all of its sales through the Salesforce CRM solution.

In this scenario, which cloud service model are they using?

Options:

Storage as a Service (STaaS)

Software as a Service (SaaS)

Platform as a Service (PaaS)

Infrastructure as a Service (IaaS)

Answer:

B

Explanation:

Cloud service models define how services are delivered and managed in a cloud environment. The three primary models are:

Infrastructure as a Service (IaaS): Provides virtualized computing resources such as servers, storage, and networking over the internet. Examples include Amazon EC2 and Microsoft Azure Virtual Machines.

Platform as a Service (PaaS): Provides a platform for developers to build, deploy, and manage applications without worrying about the underlying infrastructure. Examples include Google App Engine and Microsoft Azure App Service.

Software as a Service (SaaS): Delivers fully functional applications over the internet, eliminating the need for users to install or maintain software locally. Examples include Salesforce CRM, Google Workspace, and Microsoft Office 365.

In this scenario, the organization is using Salesforce CRM, which is a SaaS solution. Salesforce provides a complete customer relationship management (CRM) application that is accessible via a web browser, with no need for the organization to manage the underlying infrastructure or application code.

Why SaaS?

No Infrastructure Management: The customer does not need to worry about provisioning servers, databases, or networking components.

Fully Managed Application: Salesforce handles updates, patches, and maintenance, ensuring the application is always up-to-date.

Accessibility: Users can access Salesforce CRM from any device with an internet connection.
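The three service models differ in where the provider's responsibility stops in the technology stack. The sketch below encodes the commonly drawn responsibility chart as a lookup. The layer names and cut-off points follow that conventional chart, not a Juniper or Salesforce document. Even under SaaS, the customer still owns and governs its own data (for example, its Salesforce records).

```python
# Technology stack from the bottom (infrastructure) to the top (application).
LAYERS = ["networking", "storage", "servers", "virtualization",
          "operating system", "middleware", "runtime", "data", "applications"]

# Number of layers, counted from the bottom, that the provider manages.
PROVIDER_MANAGED = {"on-premises": 0, "IaaS": 4, "PaaS": 7, "SaaS": 9}


def who_manages(model, layer):
    """Return 'provider' or 'customer' for a layer under a given service model."""
    return "provider" if LAYERS.index(layer) < PROVIDER_MANAGED[model] else "customer"


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        customer = [layer for layer in LAYERS if who_manages(model, layer) == "customer"]
        print(model, "customer manages:", customer or "nothing in the stack")
```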

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding the different cloud service models and their use cases. SaaS is particularly relevant in scenarios where organizations want to leverage pre-built applications without the complexity of managing infrastructure or development platforms.

For example, Juniper’s cloud solutions often integrate with SaaS platforms like Salesforce to provide secure connectivity and enhanced functionality. Understanding the role of SaaS in cloud architectures is essential for designing and implementing cloud-based solutions.

Which two statements are correct about Kubernetes resources? (Choose two.)

Options:

A ClusterIP type service can only be accessed within a Kubernetes cluster.

A daemonSet ensures that a replica of a pod is running on all nodes.

A deploymentConfig is a Kubernetes resource.

NodePort service exposes the service externally by using a cloud provider load balancer.

Answer:

A, B

Explanation:

Kubernetes resources are the building blocks of Kubernetes clusters, enabling the deployment and management of applications. Let’s analyze each statement:

A. A ClusterIP type service can only be accessed within a Kubernetes cluster.

Correct:

A ClusterIP service is the default type of Kubernetes service. It exposes the service internally within the cluster, assigning it a virtual IP address that is accessible only to other pods or services within the same cluster. External access is not possible with this service type.

B. A daemonSet ensures that a replica of a pod is running on all nodes.

Correct:

A DaemonSet ensures that a copy of a specific pod is running on every node in the cluster (or a subset of nodes if specified). This is commonly used for system-level tasks like logging agents or monitoring tools that need to run on all nodes.

C. A deploymentConfig is a Kubernetes resource.

Incorrect:

DeploymentConfig is a concept specific to OpenShift, not standard Kubernetes. In Kubernetes, the equivalent resource is called a Deployment, which manages the desired state of pods and ReplicaSets.

D. NodePort service exposes the service externally by using a cloud provider load balancer.

Incorrect:

A NodePort service exposes the service on a static port on each node in the cluster, allowing external access via the node's IP address and the assigned port. However, it does not use a cloud provider load balancer. The LoadBalancer service type is the one that leverages cloud provider load balancers for external access.

Why These Statements?

ClusterIP: Ensures internal-only communication, making it suitable for backend services that do not need external exposure.

DaemonSet: Guarantees that a specific pod runs on all nodes, ensuring consistent functionality across the cluster.
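The difference between the service types shows up directly in the manifest. The sketch below builds `v1` Service manifests as Python dictionaries. It rejects a `nodePort` on a ClusterIP service and checks node ports against the default range of 30000-32767, which clusters can change through the kube-apiserver `--service-node-port-range` flag. The service name and ports are hypothetical, and the selector assumes pods labeled `app: <name>`.

```python
NODE_PORT_RANGE = range(30000, 32768)  # default node port range 30000-32767


def service_manifest(name, svc_type, port, target_port, node_port=None):
    """Build a Kubernetes Service manifest (hypothetical names and ports)."""
    if svc_type not in ("ClusterIP", "NodePort", "LoadBalancer"):
        raise ValueError(f"unsupported service type: {svc_type}")
    port_spec = {"port": port, "targetPort": target_port}
    if node_port is not None:
        if svc_type == "ClusterIP":
            raise ValueError("ClusterIP services are internal and take no nodePort")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {node_port} is outside 30000-32767")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": svc_type, "selector": {"app": name}, "ports": [port_spec]},
    }


if __name__ == "__main__":
    print(service_manifest("backend", "ClusterIP", 80, 8080))
    print(service_manifest("web", "NodePort", 80, 8080, node_port=30080))
```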

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes resources and their functionalities, including services, DaemonSets, and Deployments. Understanding these concepts is essential for managing Kubernetes clusters effectively.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking features for services and DaemonSets, ensuring seamless operation of distributed applications.

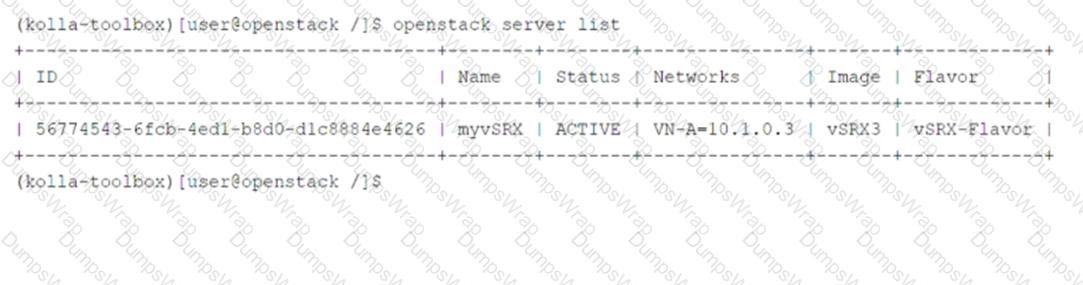

Click the Exhibit button.

Referring to the exhibit, which two statements are correct? (Choose two.)

Options:

The myvSRX instance is using a default image.

The myvSRX instance is a part of a default network.

The myvSRX instance is created using a custom flavor.

The myvSRX instance is currently running.

Answer:

C, D

Explanation:

The openstack server list command provides information about virtual machine (VM) instances in the OpenStack environment. Let’s analyze the exhibit and each statement:

Key Information from the Exhibit:

The output shows details about the myvSRX instance:

Status: ACTIVE (indicating the instance is running).

Networks: VN-A-10.1.0.3 (indicating the instance is part of a specific network).

Image: vSRX3 (indicating the instance was created using a custom image).

Flavor: vSRX-Flavor (indicating the instance was created using a custom flavor).

Option Analysis:

A. The myvSRX instance is using a default image.

Incorrect: The image name vSRX3 indicates a custom image added by the operator (such as an uploaded vSRX qcow2 image), not a default image provided with the OpenStack deployment.

B. The myvSRX instance is a part of a default network.

Incorrect: The Networks entry VN-A-10.1.0.3 shows that the instance is attached to a specifically created network, not to a default network.

C. The myvSRX instance is created using a custom flavor.

Correct: The flavor name vSRX-Flavor indicates that the instance was created using a custom flavor, which defines the vCPU, RAM, and disk properties.

D. The myvSRX instance is currently running.

Correct: The ACTIVE status confirms that the instance is currently running.
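The reasoning behind statements C and D can be sketched as two small checks on one row of `openstack server list` output. This assumes the JSON output format (`openstack server list -f json`) uses the column headers shown in the exhibit as keys; the row below is a hypothetical transcription of the exhibit, and the set of "default" flavors is an assumption (the classic m1.* flavors that many deployments such as DevStack create, which is not guaranteed for every cloud).

```python
# Hypothetical row transcribed from the exhibit.
EXHIBIT_ROW = {
    "Name": "myvSRX",
    "Status": "ACTIVE",
    "Image": "vSRX3",
    "Flavor": "vSRX-Flavor",
}

# Assumption: commonly created default flavors; real defaults depend on the installation.
COMMON_DEFAULT_FLAVORS = {"m1.tiny", "m1.small", "m1.medium", "m1.large", "m1.xlarge"}


def is_running(row: dict) -> bool:
    """Nova reports a running instance with the status ACTIVE (a stopped one shows SHUTOFF)."""
    return row["Status"] == "ACTIVE"


def uses_custom_flavor(row: dict) -> bool:
    """Treat any flavor outside the assumed default set as operator-defined."""
    return row["Flavor"] not in COMMON_DEFAULT_FLAVORS


if __name__ == "__main__":
    print(EXHIBIT_ROW["Name"], "running:", is_running(EXHIBIT_ROW))
    print(EXHIBIT_ROW["Name"], "custom flavor:", uses_custom_flavor(EXHIBIT_ROW))
```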

Why These Statements?

Custom Flavor: The vSRX-Flavor name clearly indicates that a custom flavor was used to define the instance's resource allocation.

Running Instance: The ACTIVE status confirms that the instance is operational and available for use.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack commands and outputs, including the openstack server list command. Recognizing how images, flavors, and statuses are represented is essential for managing VM instances effectively.

For example, Juniper Contrail integrates with OpenStack Nova to provide advanced networking features for VMs, ensuring seamless operation based on their configurations.

Which OpenShift resource represents a Kubernetes namespace?

Options:

Project

ResourceQuota

Build

Operator

Answer:

A

Explanation:

OpenShift is a Kubernetes-based container platform that introduces additional abstractions and terminologies. Let’s analyze each option:

A. Project

Correct:

In OpenShift, a Project represents a Kubernetes namespace with additional capabilities. It provides a logical grouping of resources and enables multi-tenancy by isolating resources between projects.
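The one-to-one mapping between a Project and a namespace can be illustrated with two manifests. This sketch assumes that `oc new-project` submits a `ProjectRequest` in the `project.openshift.io/v1` group, with top-level `displayName` and `description` fields, and that the resulting project is backed by a namespace of the same name; both helper functions and the `team-a` name are hypothetical.

```python
import json


def project_request(name: str, display_name: str = "", description: str = "") -> dict:
    """Minimal OpenShift ProjectRequest, the kind of object `oc new-project` submits."""
    return {
        "apiVersion": "project.openshift.io/v1",
        "kind": "ProjectRequest",
        "metadata": {"name": name},
        "displayName": display_name,
        "description": description,
    }


def namespace_for(name: str) -> dict:
    """The plain Kubernetes Namespace that a project of the same name corresponds to."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


if __name__ == "__main__":
    # Hypothetical project for a single team; the namespace shares the project's name.
    req = project_request("team-a", display_name="Team A")
    ns = namespace_for(req["metadata"]["name"])
    print(json.dumps([req, ns], indent=2))
```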

B. ResourceQuota

Incorrect:

A ResourceQuota is a Kubernetes object that limits the amount of resources (e.g., CPU, memory) that can be consumed within a namespace. While it is used within a project, it is not the same as a namespace.
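The relationship is easier to see in a manifest: a ResourceQuota is an object that lives inside a namespace (or OpenShift project) and constrains it, rather than being the namespace itself. A minimal sketch; the helper name, the `compute-quota` name, and the limit values are hypothetical.

```python
import json


def resource_quota(namespace: str, cpu: str = "2", memory: str = "4Gi", pods: int = 10) -> dict:
    """Minimal v1 ResourceQuota capping aggregate requests and pod count in one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        # The quota is created inside the namespace (project) it constrains.
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,        # total CPU requests across all pods
                "requests.memory": memory,  # total memory requests across all pods
                "pods": str(pods),          # maximum number of pods
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(resource_quota("team-a"), indent=2))
```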

C. Build

Incorrect:

A Build is an OpenShift-specific resource used to transform source code into container images. It is unrelated to namespaces or projects.

D. Operator

Incorrect:

An Operator is a Kubernetes extension that automates the management of complex applications. It may run in and watch one or more namespaces, but it does not represent a namespace itself.

Why Project?

Namespace Abstraction: OpenShift Projects extend Kubernetes namespaces by adding features like user roles, quotas, and lifecycle management.

Multi-Tenancy: Projects enable organizations to isolate workloads and resources for different teams or applications.

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenShift and its integration with Kubernetes. Understanding the relationship between Projects and namespaces is essential for managing OpenShift environments.

For example, Juniper Contrail integrates with OpenShift to provide advanced networking and security features for Projects, ensuring secure and efficient resource isolation.

You must install a basic Kubernetes cluster.

Which tool would you use in this situation?

Options:

kubeadm

kubectl apply

kubectl create

dashboard

Answer:

A

Explanation:

To install a basic Kubernetes cluster, you need a tool that simplifies the process of bootstrapping and configuring the cluster. Let’s analyze each option:

A. kubeadm

Correct:

kubeadm is a command-line tool specifically designed to bootstrap a Kubernetes cluster: kubeadm init sets up the control plane, and kubeadm join adds worker nodes. This makes it the most suitable choice for installing a basic Kubernetes cluster.

B. kubectl apply

Incorrect:

kubectl apply is used to deploy resources (e.g., pods, services) into an existing Kubernetes cluster by applying YAML or JSON manifests. It does not bootstrap or install a new cluster.

C. kubectl create

Incorrect:

kubectl create is an imperative Kubernetes CLI command that creates resources in an existing cluster and fails if the resource already exists. Like kubectl apply, it does not handle cluster installation.
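The practical difference between the two commands is create-only versus create-or-update. The toy model below is a hypothetical in-memory class, not kubectl's implementation: `create` fails when the object already exists, while `apply` reports `created`, `configured`, or `unchanged`, the same words kubectl apply prints. Real apply computes a three-way merge (or uses server-side apply) instead of replacing the whole object as this sketch does.

```python
class AlreadyExists(Exception):
    """Raised by the toy create(), mirroring an AlreadyExists error from the API server."""


class ToyAPIServer:
    """Hypothetical in-memory stand-in for a cluster's object store."""

    def __init__(self):
        self.objects = {}  # (kind, name) -> spec dict

    def create(self, kind: str, name: str, spec: dict) -> str:
        # Imperative: only creates, never updates.
        key = (kind, name)
        if key in self.objects:
            raise AlreadyExists(f'{kind} "{name}" already exists')
        self.objects[key] = dict(spec)
        return "created"

    def apply(self, kind: str, name: str, spec: dict) -> str:
        # Declarative: converge the stored object toward the desired spec.
        key = (kind, name)
        if key not in self.objects:
            self.objects[key] = dict(spec)
            return "created"
        if self.objects[key] == spec:
            return "unchanged"
        self.objects[key] = dict(spec)  # real apply merges fields instead
        return "configured"


if __name__ == "__main__":
    server = ToyAPIServer()
    print(server.create("Deployment", "web", {"replicas": 1}))
    print(server.apply("Deployment", "web", {"replicas": 3}))
    try:
        server.create("Deployment", "web", {"replicas": 1})
    except AlreadyExists as err:
        print("create failed:", err)
```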

D. dashboard

Incorrect:

The Kubernetes dashboard is a web-based UI for managing and monitoring a Kubernetes cluster. It requires an already-installed cluster and cannot be used to install one.

Why kubeadm?

Cluster Bootstrapping: kubeadm provides a simple and standardized way to initialize a Kubernetes cluster, including setting up the control plane and joining worker nodes.

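Flexibility: kubeadm creates a minimal cluster and leaves add-ons to the administrator, so it can be customized and combined with additional tools. For example, a CNI plugin must be installed separately to provide pod networking.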
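kubeadm init can read its settings from a configuration file, which is where the pod network for the CNI plugin is usually declared. As a sketch, the hypothetical helper below builds a minimal ClusterConfiguration as a dict, assuming the `kubeadm.k8s.io/v1beta3` config API (newer kubeadm releases add `v1beta4`, so check which versions your release accepts). It uses Flannel's default pod network 10.244.0.0/16 and kubeadm's default service network 10.96.0.0/12 as example values, and rejects overlapping ranges.

```python
import ipaddress
import json

# Flannel's default pod network; other CNI plugins use different defaults.
FLANNEL_DEFAULT_POD_CIDR = "10.244.0.0/16"
# kubeadm's default service network.
KUBEADM_DEFAULT_SERVICE_CIDR = "10.96.0.0/12"


def kubeadm_cluster_config(k8s_version: str,
                           pod_subnet: str = FLANNEL_DEFAULT_POD_CIDR,
                           service_subnet: str = KUBEADM_DEFAULT_SERVICE_CIDR) -> dict:
    """Minimal kubeadm ClusterConfiguration (assumes the v1beta3 config API)."""
    # ip_network raises ValueError for malformed CIDRs.
    pods = ipaddress.ip_network(pod_subnet)
    services = ipaddress.ip_network(service_subnet)
    if pods.overlaps(services):
        raise ValueError("pod and service subnets must not overlap")
    return {
        "apiVersion": "kubeadm.k8s.io/v1beta3",
        "kind": "ClusterConfiguration",
        "kubernetesVersion": k8s_version,
        "networking": {"podSubnet": pod_subnet, "serviceSubnet": service_subnet},
    }


if __name__ == "__main__":
    print(json.dumps(kubeadm_cluster_config("v1.30.0"), indent=2))
```

Saved to a file (usually as YAML; JSON is valid YAML, so the printed form should also parse), the configuration would be passed with `kubeadm init --config <file>` on the control-plane node. After that, a CNI plugin whose pod network matches `podSubnet` is installed, and worker nodes join using the `kubeadm join` command that `kubeadm init` prints.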

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes installation methods, including kubeadm. Understanding how to use kubeadm is essential for deploying and managing Kubernetes clusters effectively.

For example, Juniper Contrail integrates with Kubernetes clusters created using kubeadm to provide advanced networking and security features.

Which two tools are used to deploy a Kubernetes environment for testing and development purposes? (Choose two.)

Options:

OpenStack

kind

oc

minikube

Answer:

B, D

Explanation:

Kubernetes is a popular container orchestration platform used for deploying and managing containerized applications. Several tools are available for setting up Kubernetes environments for testing and development purposes. Let’s analyze each option:

A. OpenStack

Incorrect: OpenStack is an open-source cloud computing platform used for managing infrastructure resources (e.g., compute, storage, networking). It is not specifically designed for deploying Kubernetes environments.

B. kind

Correct: kind (Kubernetes IN Docker) is a tool for running local Kubernetes clusters using Docker containers as nodes. It is lightweight and ideal for testing and development purposes.
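kind's multi-node support comes from an optional cluster config. A minimal sketch, assuming the `kind.x-k8s.io/v1alpha4` config format: the hypothetical helper below builds a config with one control-plane node and a chosen number of worker nodes, each of which kind runs as a Docker container.

```python
import json


def kind_cluster_config(workers: int = 2) -> dict:
    """kind cluster config: one control-plane node plus `workers` worker nodes."""
    if workers < 0:
        raise ValueError("workers must be >= 0")
    nodes = [{"role": "control-plane"}] + [{"role": "worker"} for _ in range(workers)]
    return {"kind": "Cluster", "apiVersion": "kind.x-k8s.io/v1alpha4", "nodes": nodes}


if __name__ == "__main__":
    print(json.dumps(kind_cluster_config(), indent=2))
```

Saved as a file, the config would be used with `kind create cluster --config <file>`. kind's documentation shows YAML; treat the assumption that the JSON form parses the same way (JSON being valid YAML) as something to verify.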

C. oc

Incorrect: oc is the command-line interface (CLI) for OpenShift, a Kubernetes-based container platform. While OpenShift can be used to deploy Kubernetes environments, oc itself is not a tool for setting up standalone Kubernetes clusters.

D. minikube

Correct: minikube is a tool for running a local Kubernetes cluster on your machine, single-node by default (multi-node clusters are possible with the --nodes flag). It is widely used for testing and development due to its simplicity and ease of setup.

Why These Tools?

kind: Ideal for simulating multi-node Kubernetes clusters in a lightweight environment.

minikube: Perfect for beginners and developers who need a simple, single-node Kubernetes cluster for experimentation.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes as part of its container orchestration curriculum. Tools like kind and minikube are essential for learning and experimenting with Kubernetes in local environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features for containerized workloads. Proficiency with Kubernetes tools ensures effective operation and troubleshooting.