SAP Certified Associate - SAP Generative AI Developer Questions and Answers

Which of the following is a principle of effective prompt engineering?

Options:

Use precise language and provide detailed context in prompts.

Combine multiple complex tasks into a single prompt.

Keep prompts as short as possible to avoid confusion.

Write vague and open-ended instructions to encourage creativity.

Answer:

A

Explanation:

Effective prompt engineering is crucial for guiding AI models to produce accurate and relevant outputs.

1. Importance of Precision and Context:

Clarity: Using precise language in prompts minimizes ambiguity, ensuring the AI model comprehends the exact requirements.

Detailed Context: Providing comprehensive context helps the model understand the background and nuances of the task, leading to more accurate and tailored responses.

2. Best Practices in Prompt Engineering:

Specificity: Clearly define the desired outcome, including any constraints or specific formats required.

Instruction Inclusion: Incorporate explicit instructions within the prompt to guide the model's behavior effectively.

Avoiding Ambiguity: Steer clear of vague or open-ended language that could lead to varied interpretations.

3. Benefits of Effective Prompt Engineering:

Enhanced Output Quality: Well-crafted prompts lead to responses that closely align with user expectations.

Efficiency: Well-crafted prompts reduce the need for iterative refinements, saving time and computational resources.
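
The principles above can be illustrated with a minimal Python sketch. The `build_prompt` helper and its parameter names are hypothetical (they are not part of any SAP SDK); the sketch only shows how the task, context, constraints, and output format can be stated explicitly instead of being left for the model to guess.

```python
def build_prompt(task: str, context: str, constraints: list[str], output_format: str) -> str:
    """Assemble a prompt that states task, context, constraints, and format explicitly."""
    lines = [
        f"Task: {task}",
        f"Context: {context}",
        "Constraints:",
    ]
    lines += [f"- {c}" for c in constraints]
    lines.append(f"Output format: {output_format}")
    return "\n".join(lines)


# A vague prompt leaves scope, audience, and format open to interpretation.
vague_prompt = "Tell me about our sales."

# A precise prompt pins each of them down.
precise_prompt = build_prompt(
    task="Summarize Q3 sales performance for the EMEA region.",
    context="The reader is a regional sales manager preparing a quarterly review.",
    constraints=["Use at most 5 bullet points", "Mention the top 3 products by revenue"],
    output_format="Plain-text bullet list",
)

print(precise_prompt)
```

Keeping the four parts as separate arguments makes it easy to vary one of them (for example the output format) while holding the rest constant during prompt experiments.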

Which of the following are features of the SAP AI Foundation? Note: There are 2 correct answers to this question.

Options:

Ready-to-use AI services

AI runtimes and lifecycle management

Open source AI model repository

Joule integration in SAP SuccessFactors

Answer:

A, B

Explanation:

SAP AI Foundation is an all-in-one AI toolkit that provides developers with the necessary tools to build AI-powered extensions and applications on SAP Business Technology Platform (SAP BTP).

1. Ready-to-Use AI Services:

Pre-Built AI Capabilities: AI Foundation offers a suite of ready-to-use AI services, enabling developers to integrate AI functionalities into their applications without the need to build models from scratch. These services include capabilities such as document information extraction, translation, and personalized recommendations.

2. AI Runtimes and Lifecycle Management:

Comprehensive AI Management: AI Foundation provides tools for managing AI runtimes and the entire AI lifecycle, including model deployment, monitoring, and maintenance. This ensures that AI models operate efficiently and remain up-to-date, facilitating seamless integration into business processes.

3. Integration with SAP BTP:

Unified Platform: By integrating with SAP BTP, AI Foundation allows for the development of AI solutions that are grounded in business data and context, ensuring relevance and reliability in AI-driven applications.

What contract type does SAP offer for AI ecosystem partner solutions?

Options:

Annual subscription-only contracts

All-in-one contracts, with services that are contracted through SAP

Pay-as-you-go for each partner service

Bring Your Own License (BYOL) for embedded partner solutions

Answer:

B

Explanation:

SAP collaborates with a wide ecosystem of partners, including leading general-purpose AI vendors, to provide tailored solutions to its customers. Through the SAP Store, customers have access to numerous partner applications and a variety of tools, allowing them to choose solutions that best fit their requirements.

Contractual Approach:

All-in-One Contracts: SAP offers all-in-one contracts for AI ecosystem partner solutions, where services are white-labeled and contracted directly through SAP. This approach simplifies the procurement process for customers, as they engage with SAP as the single point of contact for both SAP and partner services.

Exclusion of Bring Your Own License (BYOL) Model: SAP does not adopt a "bring your own license" model for these embedded partner solutions. Instead, all services are integrated and provided under unified contracts managed by SAP.

Benefits of This Contractual Model:

Simplified Procurement: Customers benefit from a streamlined purchasing process, dealing with a single contract and point of contact for multiple services.

Integrated Solutions: The all-in-one contract ensures that partner solutions are seamlessly integrated with SAP's offerings, providing a cohesive experience.

Assured Compliance and Support: By contracting through SAP, customers can be confident in the compliance, security, and support standards upheld across all services.

What does the Prompt Management feature of SAP AI Launchpad allow users to do?

Options:

Create and edit prompts

Provide personalized user interactions

Interact with models through a conversational interface

Access and manage saved prompts and their versions

Answer:

A, D

Explanation:

The Prompt Management feature within SAP AI Launchpad's Generative AI Hub offers users comprehensive tools for handling prompts throughout their lifecycle:

1. Create and Edit Prompts:

Prompt Editor: Users can utilize the Prompt Editor to craft and modify prompts, facilitating effective prompt engineering and experimentation.

2. Access and Manage Saved Prompts and Their Versions:

Prompt Lifecycle Management: The platform provides capabilities to manage the lifecycle of prompts, including accessing saved prompts, tracking their versions, and organizing them for efficient reuse and iteration.

Conclusion:

SAP AI Launchpad's Prompt Management feature empowers users to create, edit, and manage prompts effectively, supporting robust prompt engineering and lifecycle management within the Generative AI Hub.

What does SAP recommend you do before you start training a machine learning model in SAP AI Core? Note: There are 3 correct answers to this question.

Options:

Configure the training pipeline using templates.

Define the required infrastructure resources for training.

Perform manual data integration with SAP HANA.

Configure the model deployment in SAP AI Launchpad.

Register the input dataset in SAP AI Core.

Answer:

A, B, E

Explanation:

Before initiating the training of a machine learning model in SAP AI Core, SAP recommends the following steps:

Configure the training pipeline using templates: Utilize predefined templates to set up the training pipeline, ensuring consistency and efficiency in the training process.

Define the required infrastructure resources for training: Specify the computational resources, such as CPUs or GPUs, necessary for the training job to ensure optimal performance.

Register the input dataset in SAP AI Core: Ensure that the dataset intended for training is properly registered within SAP AI Core, facilitating seamless access during the training process.

These preparatory steps are crucial for the successful training of machine learning models within the SAP AI Core environment.

Which neural network architecture is primarily used by LLMs?

Options:

Transformer architecture with self-attention mechanisms

Recurrent neural network architecture

Convolutional Neural Networks (CNNs)

Sequential encoder-decoder architecture

Answer:

A

Explanation:

Large Language Models (LLMs) primarily utilize the Transformer architecture, which incorporates self-attention mechanisms.

1. Transformer Architecture:

Overview: Introduced in 2017, the Transformer architecture revolutionized natural language processing by enabling models to handle long-range dependencies in text more effectively than previous architectures.

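Components: The original Transformer consists of an encoder-decoder structure, where the encoder processes input sequences and the decoder generates output sequences. Many LLMs use only one of the two stacks: GPT models are decoder-only, while BERT is encoder-only.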

2. Self-Attention Mechanisms:

Functionality: Self-attention allows the model to weigh the importance of different words in a sequence relative to each other, enabling it to capture contextual relationships regardless of their position.

Benefits: This mechanism facilitates parallel processing of input data, improving computational efficiency and performance in understanding complex language patterns.

3. Application in LLMs:

Model Examples: Models such as GPT-3 and BERT are built upon the Transformer architecture and use self-attention to process text; generative models such as GPT-3 also use it to produce human-like text.

Advantages: The Transformer architecture's ability to manage extensive context and dependencies makes it well-suited for tasks like language translation, summarization, and question-answering.
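
The self-attention mechanism described above can be sketched in a few lines of plain Python. This is a simplified illustration, not how production models are implemented: it assumes the queries, keys, and values are all the raw input vectors (real Transformers apply learned projection matrices and use several attention heads), and the function names are chosen for this example only.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: list[list[float]]) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product self-attention with Q = K = V = x (no learned weights)."""
    d = len(x[0])
    weights = []
    for query in x:
        # Similarity of this token to every token in the sequence, scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        weights.append(softmax(scores))
    # Each output vector is the attention-weighted average of all value vectors.
    outputs = [
        [sum(w * value[j] for w, value in zip(row, x)) for j in range(d)]
        for row in weights
    ]
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how strongly one token attends to every other token. Because each row is computed independently of the others, the rows can be evaluated in parallel, which is the efficiency benefit noted above.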

How can Joule improve workforce productivity? Note: There are 2 correct answers to this question.

Options:

By maintaining strict adherence to data privacy regulations.

By resolving hardware malfunctions.

By offering generic task recommendations unrelated to specific roles.

By providing context-based role-specific task assistance.

Answer:

A, D

Explanation:

SAP's AI copilot, Joule, enhances workforce productivity through several key features:

1. Adherence to Data Privacy Regulations:

Data Security and Privacy: Joule is designed with a strong emphasis on data security and privacy, ensuring compliance with data protection regulations. This adherence builds user trust and allows employees to utilize AI tools confidently, knowing that their data is handled responsibly.

2. Context-Based Role-Specific Task Assistance:

Personalized Assistance: Joule provides context-aware support tailored to individual roles within an organization. By understanding the specific needs and responsibilities of each user, Joule offers relevant insights and automates routine tasks, thereby enhancing efficiency and allowing employees to focus on higher-value activities.

What are some benefits of using an SDK for evaluating prompts within the context of generative AI? Note: There are 3 correct answers to this question.

Options:

Maintaining data privacy by using data masking techniques

Creating custom evaluators that meet specific business needs

Automating prompt testing across various scenarios

Supporting low code evaluations using graphical user interface

Providing metrics to quantitatively assess response quality

Answer:

B, C, E

Explanation:

Utilizing an SDK for evaluating prompts within the context of generative AI offers several benefits:

1. Creating Custom Evaluators That Meet Specific Business Needs:

Tailored Evaluation Metrics: An SDK allows developers to design and implement custom evaluation metrics that align with specific business objectives, ensuring that prompt assessments are relevant and meaningful.

Flexibility in Evaluation Criteria: Developers can define criteria that reflect the unique requirements of their applications, leading to more accurate and business-aligned evaluations.

2. Automating Prompt Testing Across Various Scenarios:

Scalability: An SDK enables the automation of prompt testing across multiple scenarios, facilitating large-scale evaluations without manual intervention.

Consistency: Automated testing ensures consistent application of evaluation criteria, reducing the potential for human error and increasing reliability.

3. Providing Metrics to Quantitatively Assess Response Quality:

Objective Assessment: The SDK can generate quantitative metrics, such as accuracy, relevance, and coherence scores, providing an objective basis for evaluating prompt performance.

Performance Monitoring: These metrics enable continuous monitoring and improvement of prompt quality, ensuring that AI models deliver optimal results.
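
The three benefits above (custom evaluators, automated testing across scenarios, quantitative metrics) can be sketched as follows. This is a generic illustration and does not use the API of any SAP SDK: `fake_model`, `keyword_coverage`, and `run_evaluation` are hypothetical names, and the canned responses stand in for calls to a deployed model.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; returns canned responses."""
    canned = {
        "Summarize the return policy.": "Items can be returned within 30 days with a receipt.",
        "List the supported payment methods.": "We accept credit cards.",
    }
    return canned.get(prompt, "")


def keyword_coverage(response: str, expected_keywords: list[str]) -> float:
    """Custom evaluator: fraction of expected keywords present in the response."""
    if not expected_keywords:
        return 1.0
    text = response.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in text)
    return hits / len(expected_keywords)


def run_evaluation(
    model: Callable[[str], str],
    scenarios: list[tuple[str, list[str]]],
) -> dict[str, float]:
    """Run every scenario through the model and score each response."""
    return {prompt: keyword_coverage(model(prompt), keywords) for prompt, keywords in scenarios}


scenarios = [
    ("Summarize the return policy.", ["30 days", "receipt"]),
    ("List the supported payment methods.", ["credit card", "invoice"]),
]
scores = run_evaluation(fake_model, scenarios)
for prompt, score in scores.items():
    print(f"{score:.2f}  {prompt}")
```

Keyword coverage is deliberately simple; the point is that the evaluator is an ordinary function, so a business-specific metric can be swapped in without changing the test loop.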

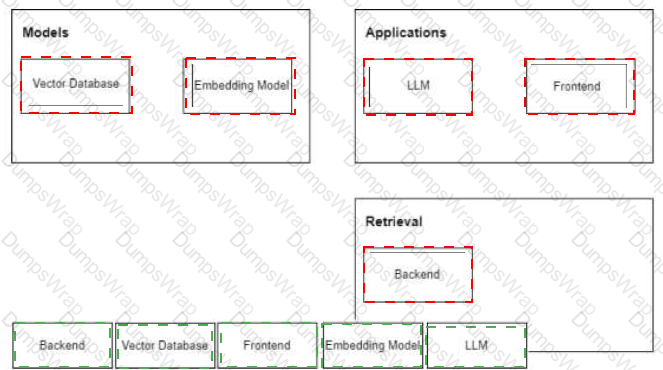

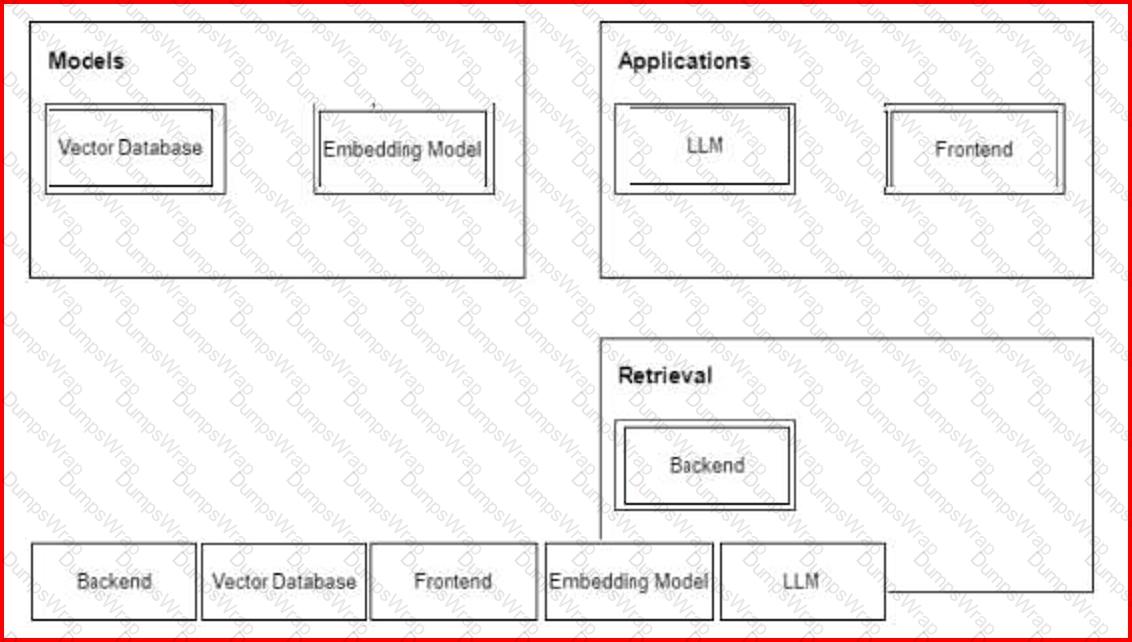

Match the components of a Retrieval Augmented Generation architecture to the diagram.

Options:

Answer:

Explanation:

Which of the following steps must be performed to deploy LLMs in the generative AI hub?

Options:

Run the booster

• Create service keys

• Select the executable ID

Provision SAP AI Core

• Check for foundation model scenario

• Create a configuration

• Create a deployment

Check for foundation model scenario

• Create a deployment

• Configure entitlements

Provision SAP AI Core

• Create a configuration

• Run the booster

Answer:

B

Explanation:

Deploying Large Language Models (LLMs) in SAP's Generative AI Hub involves a structured process:

1. Provision SAP AI Core:

Setup: Ensure that SAP AI Core is provisioned in your SAP Business Technology Platform (BTP) account to manage AI workloads.

2. Check for Foundation Model Scenario:

Validation: Verify the availability of the foundation model scenario within SAP AI Core to confirm that the necessary resources and configurations are in place for deploying LLMs.

3. Create a Configuration:

Configuration Setup: Define the parameters and settings required for the LLM deployment, including model specifications and resource allocations.

4. Create a Deployment:

Deployment Execution: Initiate the deployment process within SAP AI Core, making the LLM available for integration and use within your applications.

What are some use cases for fine-tuning of a model? Note: There are 2 correct answers to this question.

Options:

To introduce new knowledge to a model in a resource-efficient way

To quickly create iterations on a new use case

To sanitize model outputs

To customize outputs for specific types of inputs

Answer:

A, D

What is a part of LLM context optimization?

Options:

Reducing the model's size to improve efficiency

Adjusting the model's output format and style

Enhancing the computational speed of the model

Providing the model with domain-specific knowledge needed to solve a problem

Answer:

D

Explanation:

LLM context optimization involves tailoring a Large Language Model's (LLM) input context to enhance its performance on specific tasks, particularly by incorporating domain-specific knowledge.

1. Understanding LLM Context Optimization:

Definition: Context optimization refers to the process of adjusting the input provided to an LLM to ensure it includes relevant information, thereby enabling the model to generate more accurate and contextually appropriate outputs.

Domain-Specific Knowledge Integration: By embedding domain-specific information into the model's context, the LLM can better understand and address specialized queries, leading to improved problem-solving capabilities.

2. Importance of Domain-Specific Knowledge:

Enhanced Relevance: Providing domain-specific context ensures that the model's responses are pertinent to the particular field or subject matter, increasing the utility of the generated content.

Improved Accuracy: With access to specialized knowledge, the LLM is less likely to produce generic or incorrect answers, thereby enhancing the overall quality of its outputs.

3. Methods of Context Optimization:

Prompt Engineering: Crafting prompts that include necessary domain-specific information to guide the model towards generating desired responses.

Retrieval-Augmented Generation (RAG): Incorporating external data sources into the model's context to provide up-to-date and relevant information pertinent to the domain.

Which technique is used to supply domain-specific knowledge to an LLM?

Options:

Domain-adaptation training

Prompt template expansion

Retrieval-Augmented Generation

Fine-tuning the model on general data

Answer:

C

Explanation:

Retrieval-Augmented Generation (RAG) is a technique that enhances Large Language Models (LLMs) by integrating external domain-specific knowledge, enabling more accurate and contextually relevant outputs.

1. Understanding Retrieval-Augmented Generation (RAG):

Definition: RAG combines the generative capabilities of LLMs with retrieval mechanisms that access external knowledge bases or documents. This integration allows the model to incorporate up-to-date and domain-specific information into its responses.

Mechanism: When presented with a query, the RAG system retrieves pertinent information from external sources and uses this data to inform and generate a more accurate and contextually appropriate response.

2. Application in Supplying Domain-Specific Knowledge:

Domain Adaptation: By leveraging RAG, LLMs can access specialized information without the need for extensive retraining or fine-tuning. This approach is particularly beneficial for domains with rapidly evolving information or where incorporating proprietary data is essential.

Efficiency: RAG enables models to provide informed responses by referencing external data, reducing the necessity for large-scale domain-specific training datasets and thereby conserving computational resources.

3. Advantages of Using RAG:

Up-to-Date Information: Since RAG systems can query current data sources, they are capable of providing the most recent information available, which is crucial in dynamic fields.

Enhanced Accuracy: Incorporating external knowledge allows the model to produce more precise and contextually relevant outputs, especially in specialized domains.
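
The retrieve-then-generate mechanism described above can be sketched in Python as follows. The sketch makes two loud simplifications: the "embedding" is a bag-of-words count vector rather than a learned embedding model, and the final LLM call is omitted, so the output is only the grounded prompt that would be sent to the model. All function names and the sample knowledge base are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words counts (real systems use a learned embedding model)."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, documents: list[str]) -> str:
    """Place the retrieved passages into the prompt as context for the model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


knowledge_base = [
    "Warranty claims must be filed within 24 months of purchase.",
    "The cafeteria opens at 8 am on weekdays.",
    "Travel expenses are reimbursed within 10 business days.",
]
prompt = build_grounded_prompt("When must warranty claims be filed?", knowledge_base)
print(prompt)
```

Updating the knowledge base changes what the model sees at query time without any retraining, which is the efficiency argument made above.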

Why would a user include formatting instructions within a prompt?

Options:

To force the model to separate relevant and irrelevant output

To ensure the model's response follows a desired structure or style

To increase the faithfulness of the output

To redirect the output to another software program

Answer:

B

Explanation:

Including formatting instructions within a prompt is a technique used in prompt engineering to guide AI models, such as Large Language Models (LLMs), to produce outputs that adhere to a specific structure or style.

1. Purpose of Formatting Instructions in Prompts:

Structured Outputs: By embedding formatting directives within a prompt, users can instruct the AI model to generate responses in a predetermined format, such as JSON, XML, or tabular data. This is particularly useful when the output needs to be machine-readable or integrated into other applications.

Consistent Style: Formatting instructions can also dictate the stylistic elements of the response, ensuring consistency in tone, language, or presentation, which is essential for maintaining brand voice or meeting specific communication standards.

2. Implementation in SAP's Generative AI Hub:

Prompt Management: SAP's Generative AI Hub offers tools for creating and managing prompts, allowing developers to include specific formatting instructions to control the output of AI models effectively.

Prompt Editor and Management: The hub provides features like prompt editors, enabling users to experiment with different prompts and formatting instructions to achieve optimal results for their specific use cases.

3. Benefits of Using Formatting Instructions:

Enhanced Usability: Well-formatted outputs are easier to interpret and can be directly utilized in various applications without additional processing.

Improved Integration: Structured responses facilitate seamless integration with other systems, APIs, or workflows, enhancing overall efficiency.

Reduced Ambiguity: Clear formatting guidelines minimize the risk of ambiguous outputs, ensuring that the AI model's responses meet user expectations precisely.
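
A minimal Python sketch of the structured-output case described above: the prompt carries explicit JSON formatting instructions, and the calling code validates the response against the same structure before using it. The function names, the key names, and the sample response are hypothetical; a real response would come from a deployed model.

```python
import json

FORMAT_INSTRUCTIONS = (
    "Respond with a single JSON object and nothing else. "
    'Use exactly these keys: "sentiment" (one of "positive", "neutral", "negative") '
    'and "summary" (a string of at most 20 words).'
)


def build_review_prompt(review: str) -> str:
    """Combine the task, the formatting instructions, and the input text."""
    return f"Classify the customer review below.\n{FORMAT_INSTRUCTIONS}\nReview: {review}"


def parse_response(raw: str) -> dict:
    """Parse the model output and check it against the requested structure."""
    data = json.loads(raw)
    if set(data) != {"sentiment", "summary"}:
        raise ValueError(f"unexpected keys: {sorted(data)}")
    if data["sentiment"] not in {"positive", "neutral", "negative"}:
        raise ValueError(f"unexpected sentiment: {data['sentiment']}")
    return data


# Hypothetical model output used in place of a real LLM call.
raw_response = '{"sentiment": "negative", "summary": "Delivery was late and the box was damaged."}'
result = parse_response(raw_response)
print(result["sentiment"])
```

Validating the response matters because formatting instructions guide the model but do not guarantee compliance; a failed check can trigger a retry or a fallback.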

What is the purpose of splitting documents into smaller overlapping chunks in a RAG system?

Options:

To simplify the process of training the embedding model

To enable the matching of different relevant passages to user queries

To improve the efficiency of encoding queries into vector representations

To reduce the storage space required for the vector database

Answer:

B

Explanation:

In Retrieval-Augmented Generation (RAG) systems, splitting documents into smaller overlapping chunks is a crucial preprocessing step that enhances the system's ability to match relevant passages to user queries.

1. Purpose of Splitting Documents into Smaller Overlapping Chunks:

Improved Retrieval Accuracy: Dividing documents into smaller, manageable segments allows the system to retrieve the most relevant chunks in response to a user query, thereby improving the precision of the information provided.

Context Preservation: Overlapping chunks ensure that contextual information is maintained across segments, which is essential for understanding the meaning and relevance of each chunk in relation to the query.

2. Benefits of This Approach:

Enhanced Matching: By having multiple overlapping chunks, the system increases the likelihood that at least one chunk will closely match the user's query, leading to more accurate and relevant responses.

Efficient Processing: Smaller chunks are easier to process and analyze, enabling the system to handle large documents more effectively and respond to queries promptly.
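
Overlapping chunking can be sketched in Python as below. The sketch assumes word-based chunks for readability (production pipelines often split by tokens or characters instead), and `chunk_words` with its `chunk_size` and `overlap` parameters is a hypothetical helper written for this example.

```python
def chunk_words(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into word-based chunks where consecutive chunks share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


document = "one two three four five six seven eight nine ten"
for chunk in chunk_words(document, chunk_size=4, overlap=2):
    print(chunk)
```

With `chunk_size=4` and `overlap=2`, a phrase such as "four five" that would straddle a boundary under non-overlapping splitting appears intact in the second chunk, so a query about it can still match a single passage.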

How does SAP deal with vulnerability risks created by generative AI? Note: There are 2 correct answers to this question.

Options:

By implementing responsible AI use guidelines and strong product security standards.

By identifying human, technical, and exfiltration risks through an AI Security Taskforce.

By focusing on technological advancement only.

By relying on external vendors to manage security threats.

Answer:

A, B

Explanation:

SAP addresses vulnerability risks associated with generative AI through a comprehensive strategy:

1. Implementation of Responsible AI Use Guidelines and Strong Product Security Standards:

AI Ethics Policy: SAP has established an AI Ethics Policy that mandates responsible AI usage, ensuring that AI systems are designed and deployed ethically, with considerations for fairness, transparency, and accountability.

Product Security Standards: SAP integrates robust security measures into its AI products, adhering to stringent security protocols to protect against vulnerabilities and potential threats.

2. Identification of Risks through an AI Security Taskforce:

AI Security Taskforce: SAP has established an AI Security Taskforce dedicated to identifying and mitigating risks associated with generative AI, including human factors, technical vulnerabilities, and data exfiltration threats.

Which of the following is a benefit of using Retrieval Augmented Generation?

Options:

It allows LLMs to access and utilize information beyond their initial training data.

It enables LLMs to learn new languages without additional training.

It eliminates the need for fine-tuning LLMs for specific tasks.

It reduces the computational resources required for language modeling.

Answer:

A

Explanation:

Retrieval-Augmented Generation (RAG) enhances Large Language Models (LLMs) by enabling them to access and utilize information beyond their initial training data.

1. Understanding Retrieval-Augmented Generation (RAG):

Definition: RAG combines the generative capabilities of LLMs with retrieval mechanisms that access external knowledge bases or documents. This integration allows the model to incorporate up-to-date and domain-specific information into its responses.

Mechanism: When presented with a query, the RAG system retrieves pertinent information from external sources and uses this data to inform and generate a more accurate and contextually appropriate response.

2. Benefits of RAG:

Access to External Information: RAG allows LLMs to access and utilize information beyond their initial training data, enabling them to provide more accurate and relevant responses.

Up-to-Date Information: Since RAG systems can query current data sources, they are capable of providing the most recent information available, which is crucial in dynamic fields.

Improved Accuracy and Relevance: By leveraging external data, RAG enhances the accuracy and relevance of the generated content, making it particularly useful for tasks requiring detailed or domain-specific information.

What can be done once the training of a machine learning model has been completed in SAP AI Core? Note: There are 2 correct answers to this question.

Options:

The model can be deployed in SAP HANA.

The model's accuracy can be optimized directly in SAP HANA.

The model can be deployed for inferencing.

The model can be registered in the hyperscaler object store.

Answer:

C, D

Explanation:

Once the training of a machine learning model has been completed in SAP AI Core, several post-training actions can be undertaken to operationalize and manage the model effectively.

1. Deploying the Model for Inferencing:

Deployment Process: After training, the model can be deployed as a service to handle inference requests. This involves setting up a model server that exposes an endpoint for applications to send data and receive predictions.

Integration: The deployed model can be integrated into business applications, enabling real-time decision-making based on the model's predictions.