ArchiMate® 3 Part 2 Exam Questions and Answers

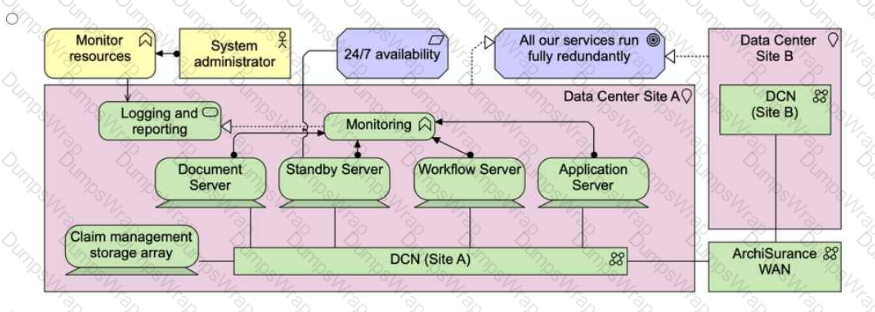

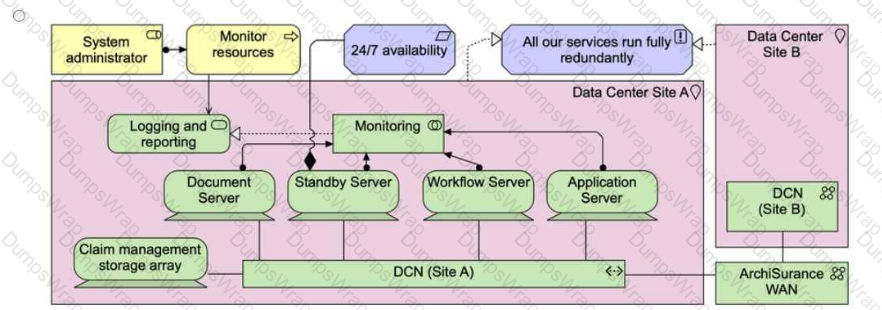

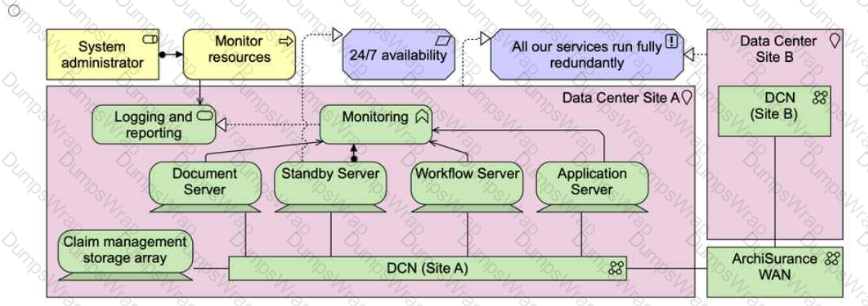

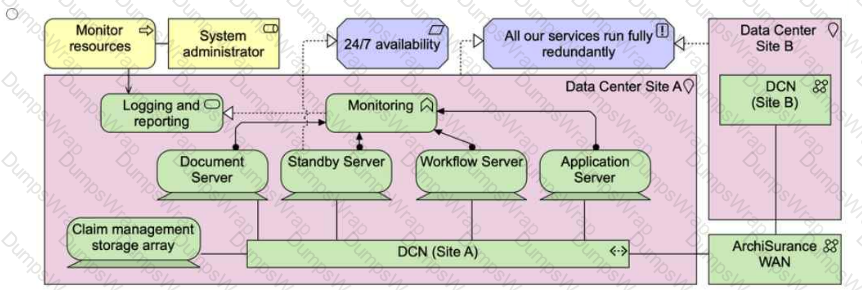

Please read this scenario prior to answering the question

The ArchiSurance enterprise document management solution plays a crucial role in supporting a large number of document types and managing a high volume of document-based transactions each day. Given its business-critical nature, the document management solution is hosted redundantly across two geographically separate data center sites: Site A and Site B. Both sites are configured identically to ensure seamless operations.

Each site has a highly available data center network (DCN) that connects to the resilient ArchiSurance wide area network (WAN). Each claim management server is connected to its respective site's DCN, forming a converged network that interconnects servers and storage arrays. A dedicated physical storage array is allocated to the claim management application within each DCN. Additionally, each site houses four powerful physical servers exclusively dedicated to the claim management application.

Among these servers, one remains on standby at any given time, while the other three take on specific roles in hosting the document, workflow, and application engines.

The standby server is responsible for monitoring the behavior of the other servers, providing a logging and reporting service. The active servers regularly transmit data to facilitate this monitoring functionality. In the event of a server failure, the standby server steps in to perform resource reallocation, replacing the faulty server. However, this task requires manual intervention from a system administrator to reconfigure the logging and reporting service to adapt to the new environment.
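As a study aid, the failover rules in this scenario can be expressed as a small Python sketch of one site. The class and method names (`Site`, `with_servers`, `fail`, `reconfigure_logging`) and the server names are hypothetical. The sketch models only what the scenario states:

- each site has four servers;
- three servers host the document, workflow, and application engines;
- one standby server provides logging and reporting and takes over a failed server's role;
- a manual reconfiguration step is needed afterwards.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# The three active roles named in the scenario.
ROLES = ("document engine", "workflow engine", "application engine")


@dataclass
class Site:
    """Hypothetical model of one data center site (Site A or Site B)."""
    name: str
    assignments: Dict[str, str] = field(default_factory=dict)  # role -> server
    standby: Optional[str] = None  # monitors the others; logging and reporting
    logging_configured: bool = True  # False until an administrator reconfigures

    @classmethod
    def with_servers(cls, name: str, servers: List[str]) -> "Site":
        """First three servers take the active roles; the fourth is the standby."""
        if len(servers) != 4:
            raise ValueError("each site has four dedicated physical servers")
        return cls(name, dict(zip(ROLES, servers[:3])), servers[3])

    def fail(self, server: str) -> str:
        """Standby replaces a failed active server (resource reallocation)."""
        role = next((r for r, s in self.assignments.items() if s == server), None)
        if role is None:
            raise ValueError(f"{server} is not an active server at {self.name}")
        if self.standby is None:
            raise RuntimeError("no standby server left to take over")
        self.assignments[role] = self.standby
        self.standby = None
        # Per the scenario, the logging and reporting service must then be
        # reconfigured manually to adapt to the new environment.
        self.logging_configured = False
        return role

    def reconfigure_logging(self) -> None:
        """Manual step performed by a system administrator."""
        self.logging_configured = True


if __name__ == "__main__":
    site_a = Site.with_servers("Site A", ["srv1", "srv2", "srv3", "srv4"])
    print(site_a.fail("srv2"))
    print(site_a.assignments)
    print(site_a.logging_configured)
    site_a.reconfigure_logging()
```

On a fresh site, `fail("srv2")` moves the workflow engine onto the former standby `srv4`. It also leaves `logging_configured` false until `reconfigure_logging()` is called, which mirrors the manual intervention described in the scenario.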

Refer to the Scenario

The IT manager has asked you to model the hardware and networks that support the document management solution. This includes capturing infrastructure components such as data center sites, servers, storage, and networks. Additionally, you are expected to outline the functionality and services required to enable failover within a server cluster. Given that both data centers share an identical configuration, it is sufficient to show only the associated networking for Site B.

Which of the following is the best answer?

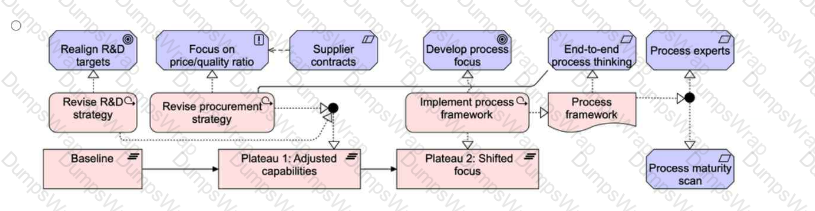

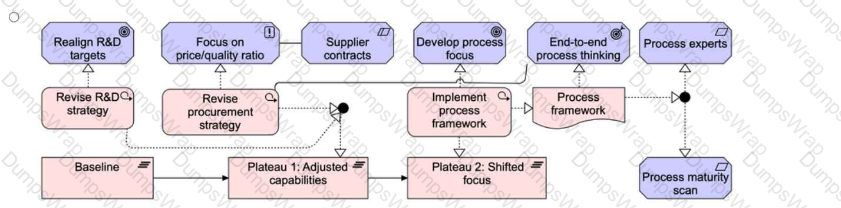

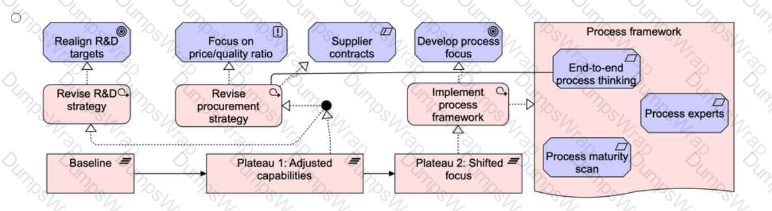

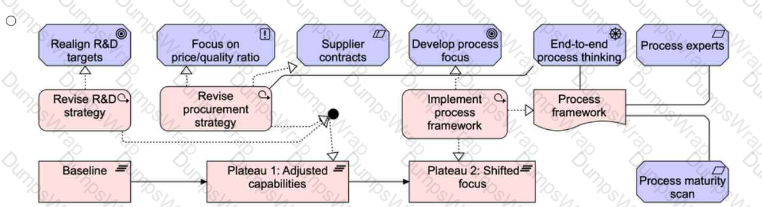

Please read this scenario prior to answering the question

ArchiCar has been a market leader in the premium-priced luxury car sector for the last decade. Its product leadership strategy has brought superior products to market and enabled ArchiCar to achieve premium prices for its cars. This strategy has been widely successful in the past, but recently competitors have been offering comparable products and taking significant market share. The governing board of ArchiCar has identified opportunities in emerging markets where the ArchiCar brand is associated with luxury and high-performance products, but is thought to be too expensive for mass-market success. Based on this assessment, the board has decided to set up a subsidiary company to mass-produce affordable cars locally. This will be achieved by focusing on a strategy of operational excellence, which is ideal for markets where customers value cost over other factors.

To facilitate this strategic transformation, the project has been divided into multiple phases within a five-year program. The initial phase, known as "Achieving Operational Excellence," is underway. The engineering team has begun devising an action plan to drive the necessary changes and outlining the technological conditions that must be met. The product architect has identified three current capabilities (industry-leading engineering, high-quality materials sourcing, and cutting-edge-focused R&D), along with their contributions to the new production philosophy.

Moving forward, it has been determined that two out of the three current capabilities require revision. Materials sourcing needs to be adjusted to meet optimization demands, and R&D targets must align with future goals to enable affordable production. Additionally, process engineering is introduced as a fourth capability to shift the company's focus from products to a process-oriented approach.

The Enterprise Architecture team has been tasked with migration planning and with identifying key work packages and deliverables. They have identified two transition states between the current and future scenarios. The first transition aims to adjust current capabilities, including revising the R&D approach and procurement strategy. The second transition aims to shift from a product-centric mindset to a process-focused approach and adjust materials sourcing accordingly. It is important to consider existing supplier contracts that cannot be immediately canceled during this process.

The Enterprise Architecture team has identified that the second transition must implement a process framework in order to shift to a process focus and meet a number of requirements, including the requirement for end-to-end process thinking. As this requirement impacts procurement processes, it also impacts the procurement strategy.
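As a study aid, the capability changes in this scenario can be summarized as a simple gap analysis between the baseline and target states. The following Python sketch is hypothetical. The capability labels are shortened from the scenario text, and the sketch records only the changes the scenario states. It does not assign the new process engineering capability to a particular transition, because the scenario does not say so explicitly.

```python
# Capability labels shortened from the scenario text (an assumption for brevity).
BASELINE = {"engineering", "materials sourcing", "R&D"}
REVISED = {"materials sourcing", "R&D"}  # "two out of the three" capabilities
ADDED = {"process engineering"}          # the new fourth capability

# Transition states in order, with the changes the scenario assigns to each.
TRANSITIONS = [
    ("Transition 1", ["revise R&D approach", "revise procurement strategy"]),
    ("Transition 2", ["implement process framework", "adjust materials sourcing"]),
]


def gap_analysis(baseline, revised, added):
    """Classify capabilities as unchanged, revised, or new in the target state."""
    return {
        "unchanged": sorted(baseline - revised),
        "revised": sorted(baseline & revised),
        "new": sorted(added - baseline),
    }


if __name__ == "__main__":
    for category, caps in gap_analysis(BASELINE, REVISED, ADDED).items():
        print(f"{category}: {', '.join(caps)}")
    for name, changes in TRANSITIONS:
        print(f"{name}: {'; '.join(changes)}")
```

Keeping the gap (what changes) separate from the transitions (when it changes) follows the scenario's own distinction between capabilities and transition states.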

Refer to the Scenario

You have been asked to model parts of the overall scenario, including migration planning, the motivations driving the migration, and the work packages necessary to achieve the desired deliverables.

Which of the following answers best describes the scenario?

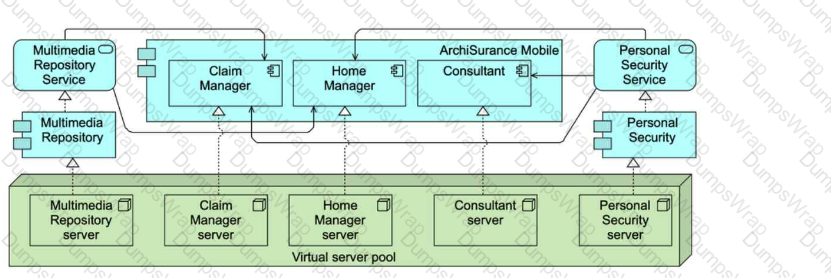

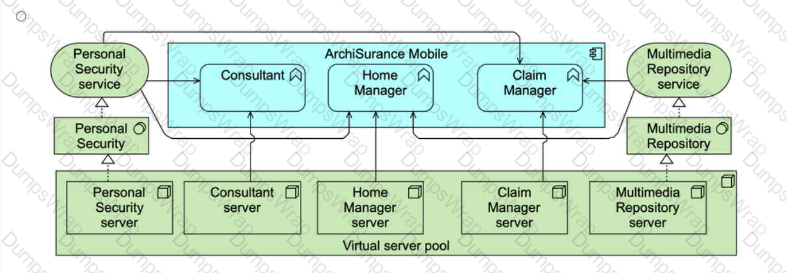

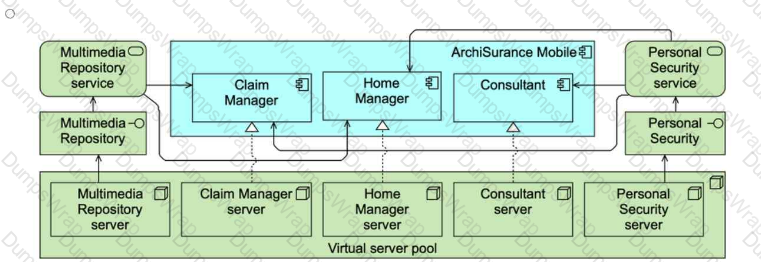

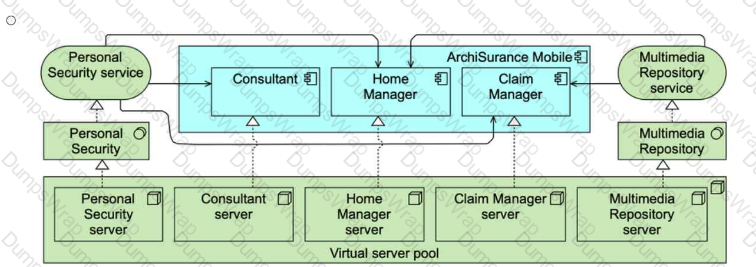

Please read this scenario prior to answering the question

The ArchiSurance Mobile consumer solution is used for selling and renewing insurance products, providing customer service, enabling accurate and convenient home recordkeeping, and capturing and processing claims. The solution consists of three applications. The Consultant application lets customers review their existing coverage and update it based on common life events, such as getting a new car, moving into a new home, or having a family member move in or out. If necessary, they can speak or chat with a customer service representative. The Home Manager application helps customers photograph and catalogue their valuable possessions in order to support the filing of accurate claims in case of loss or damage. The Claim Manager application enables customers to quickly file a claim for loss or damage to an insured auto, home, or possession. It enables customers to describe the incident by referencing information captured with the Consultant and Home Manager applications. In addition, it allows the customer to add photographs, audio, video, and text to support a claim, submit the claim, and monitor its progress.

The ArchiSurance Mobile applications rely on a number of application services hosted by ArchiSurance. The first is an Auto Identification and Description (AID) service that the Consultant application uses to validate and complete auto information entered by customers. The second service, Home Identification and Description (HID), performs the same function for home information and is used by the Home Manager application. The Consultant application also uses the Virtual Agent service to guide customers as they select coverage options, the Payment Processor service to arrange premium payments, and the Coverage Activator service to generate policies and put them in force.

ArchiSurance Mobile also relies on a number of technology services. The Home Manager application uses a Multimedia Repository service to store and retrieve information about insured homes. The Claim Manager application also uses this service for claim information entered by customers. All three ArchiSurance Mobile applications use a Personal Security service to register and authenticate customers, and to manage their profiles.

Each application service is realized by an application component with the same name. Each technology service is realized by a system software environment with the same name. ArchiSurance hosts both the application components and system software environments in a virtualized server pool within its data center. Each service has its own virtual server. Each virtual server is connected to a data center network (DCN), which in turn connects to a commercial wide area network (WAN).
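Because the scenario lists every dependency explicitly, it can help to tabulate them before comparing the answer diagrams. The Python sketch below transcribes the "uses" relationships from the text. The function names and element labels (for example "AID virtual server") are hypothetical. They were chosen only to reflect two rules in the scenario: each service is realized by an element of the same name, and each service has its own virtual server.

```python
# "Uses" relationships transcribed from the scenario text.
APP_SERVICES = {
    "Consultant": ["AID", "Virtual Agent", "Payment Processor", "Coverage Activator"],
    "Home Manager": ["HID"],
    "Claim Manager": [],
}
TECH_SERVICES = {
    "Consultant": ["Personal Security"],
    "Home Manager": ["Multimedia Repository", "Personal Security"],
    "Claim Manager": ["Multimedia Repository", "Personal Security"],
}


def supporting_stack(app):
    """List (service, realizing element, virtual server) for one application.

    Application services are realized by same-name application components,
    technology services by same-name system software, and every service
    has its own virtual server.
    """
    stack = [(s, f"{s} application component", f"{s} virtual server")
             for s in APP_SERVICES[app]]
    stack += [(s, f"{s} system software", f"{s} virtual server")
              for s in TECH_SERVICES[app]]
    return stack


def users_of(service):
    """Applications that use a given application or technology service."""
    return [app for app in APP_SERVICES
            if service in APP_SERVICES[app] or service in TECH_SERVICES[app]]


def all_services():
    """Distinct services in first-seen order (one virtual server each)."""
    seen = []
    for table in (APP_SERVICES, TECH_SERVICES):
        for services in table.values():
            for s in services:
                if s not in seen:
                    seen.append(s)
    return seen


if __name__ == "__main__":
    for row in supporting_stack("Home Manager"):
        print(row)
    print(users_of("Multimedia Repository"))
    print(len(all_services()))
```

For example, `users_of("Multimedia Repository")` returns the Home Manager and Claim Manager applications. `all_services()` yields seven distinct services, which implies seven virtual servers.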

Refer to the Scenario

You have been asked to show the applications that make up the ArchiSurance Mobile solution and the technology that supports these applications.

Which of the following answers provides the best description? Note that it is not necessary to model the networks.

[Diagrams not reproduced: server; server; software server; server; process; process flow; process; process; server; server; computer server; server]